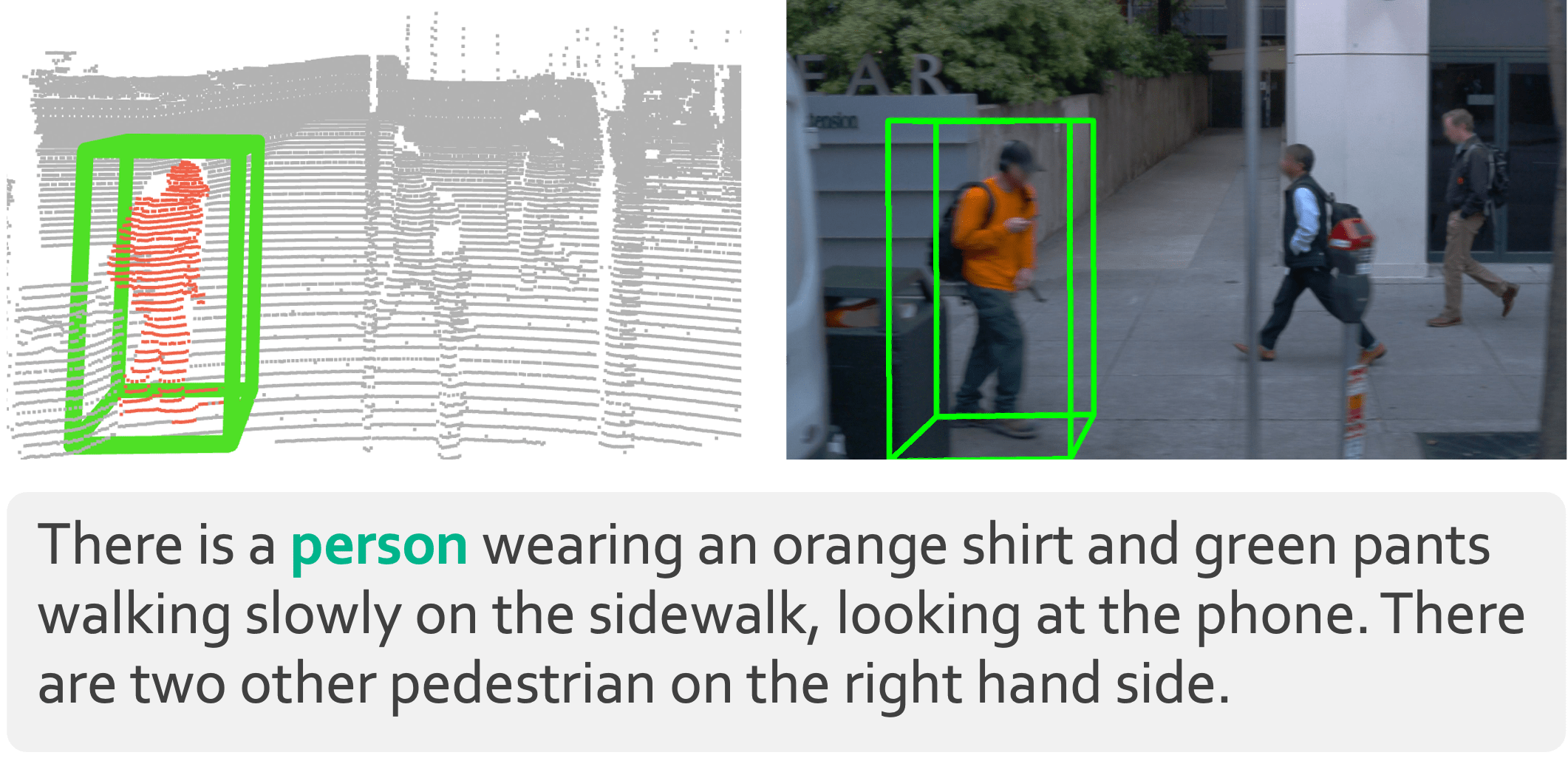

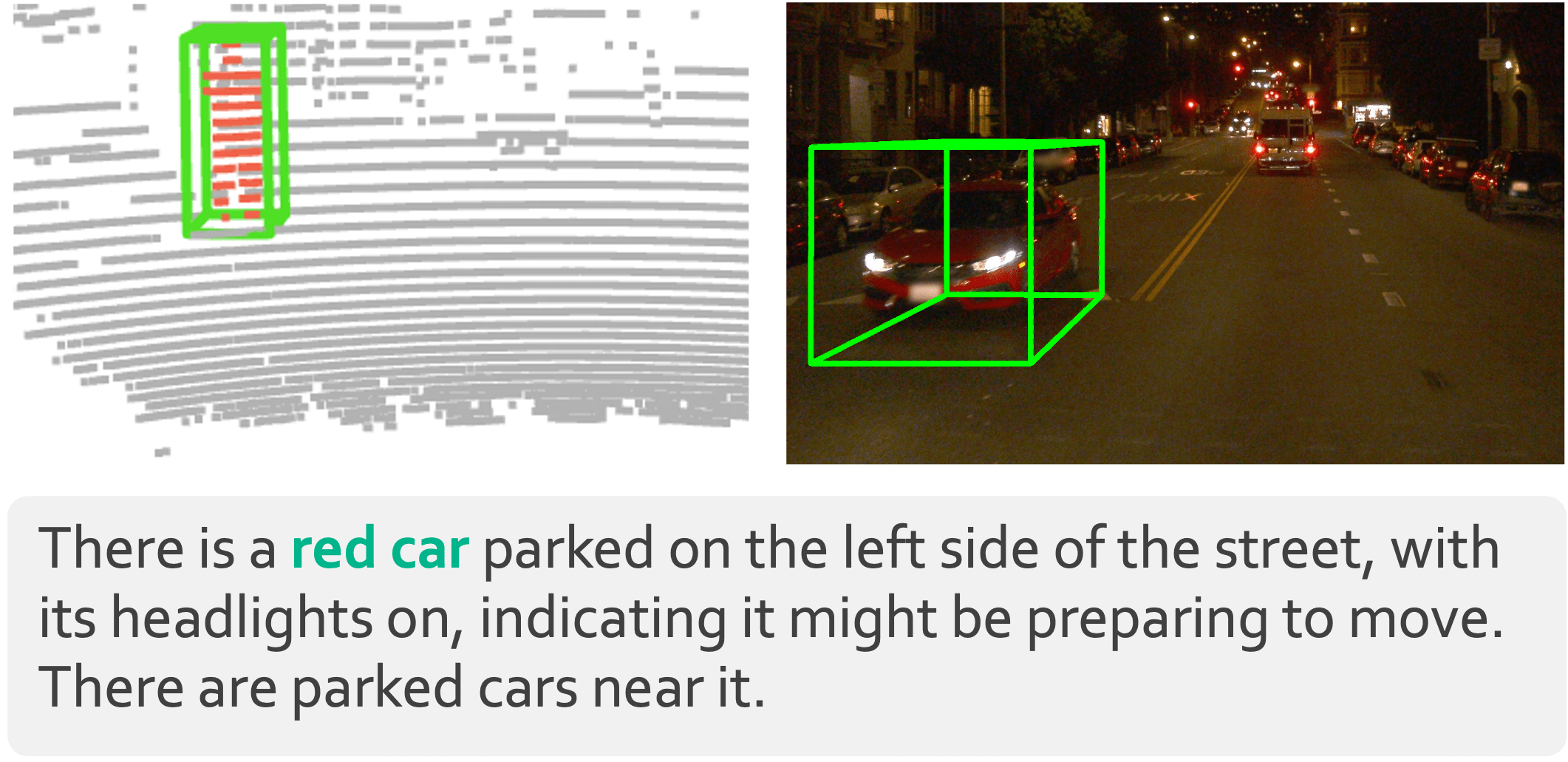

Visual grounding in 3D is key for embodied agents to localize language-referred objects in open-world environments.

However, existing benchmarks are limited by an indoor focus, single-platform constraints, and small scale.

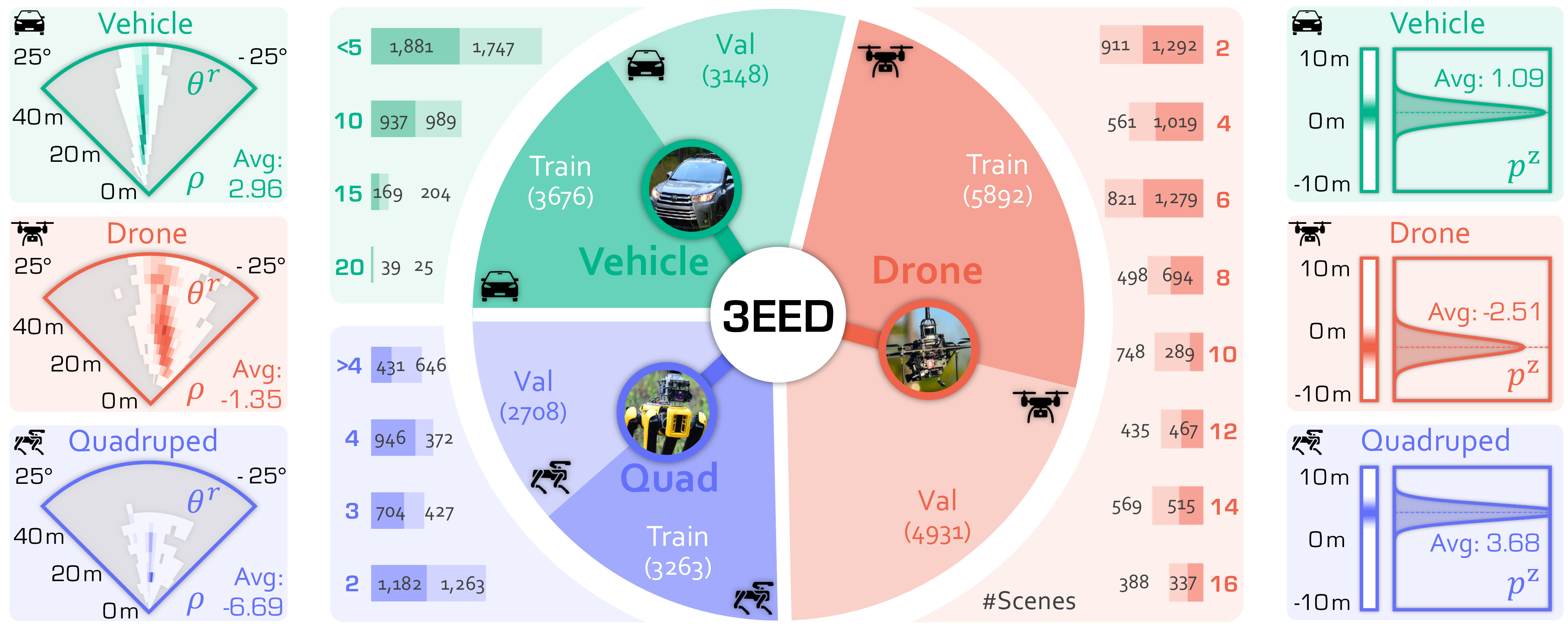

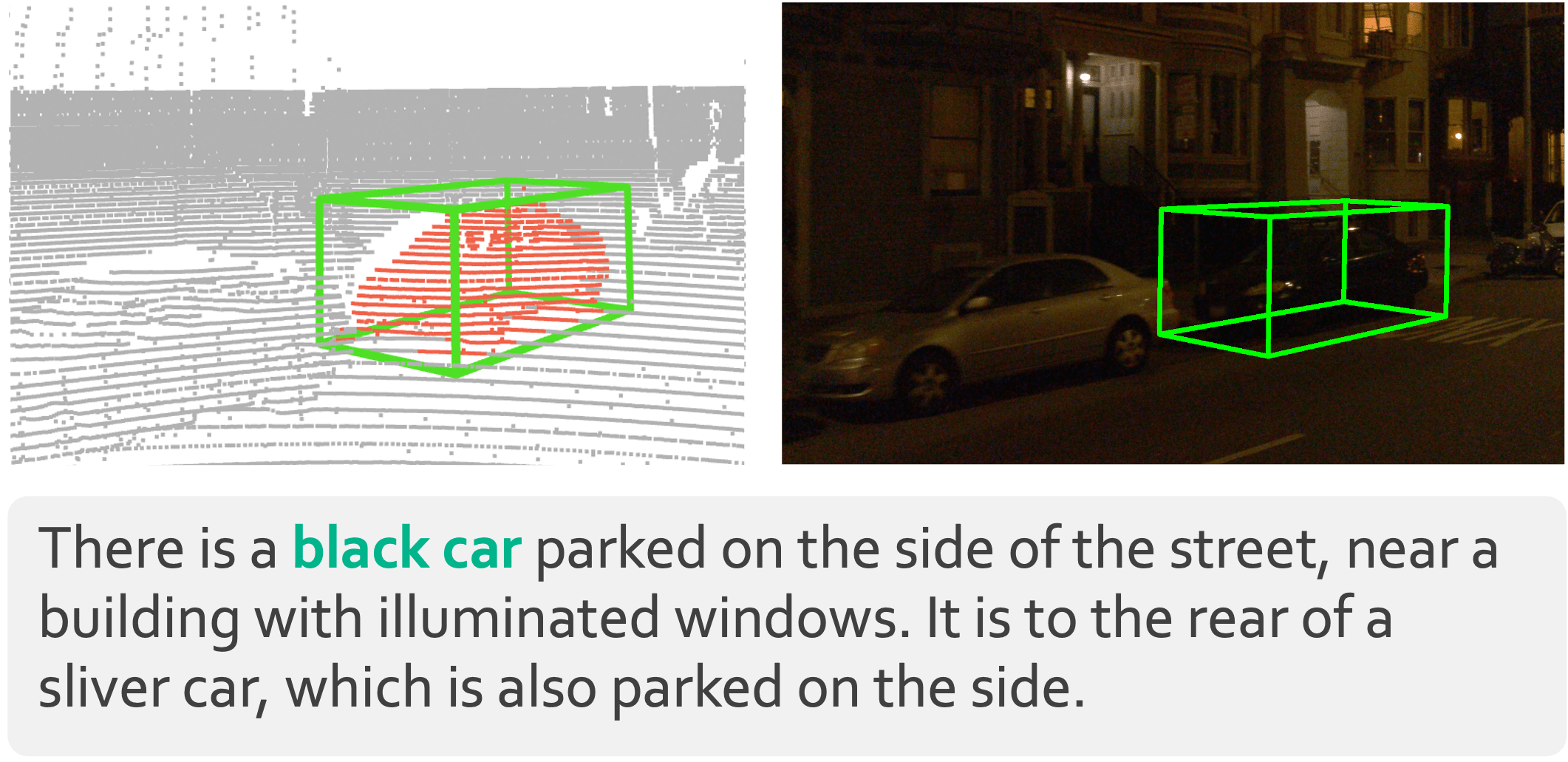

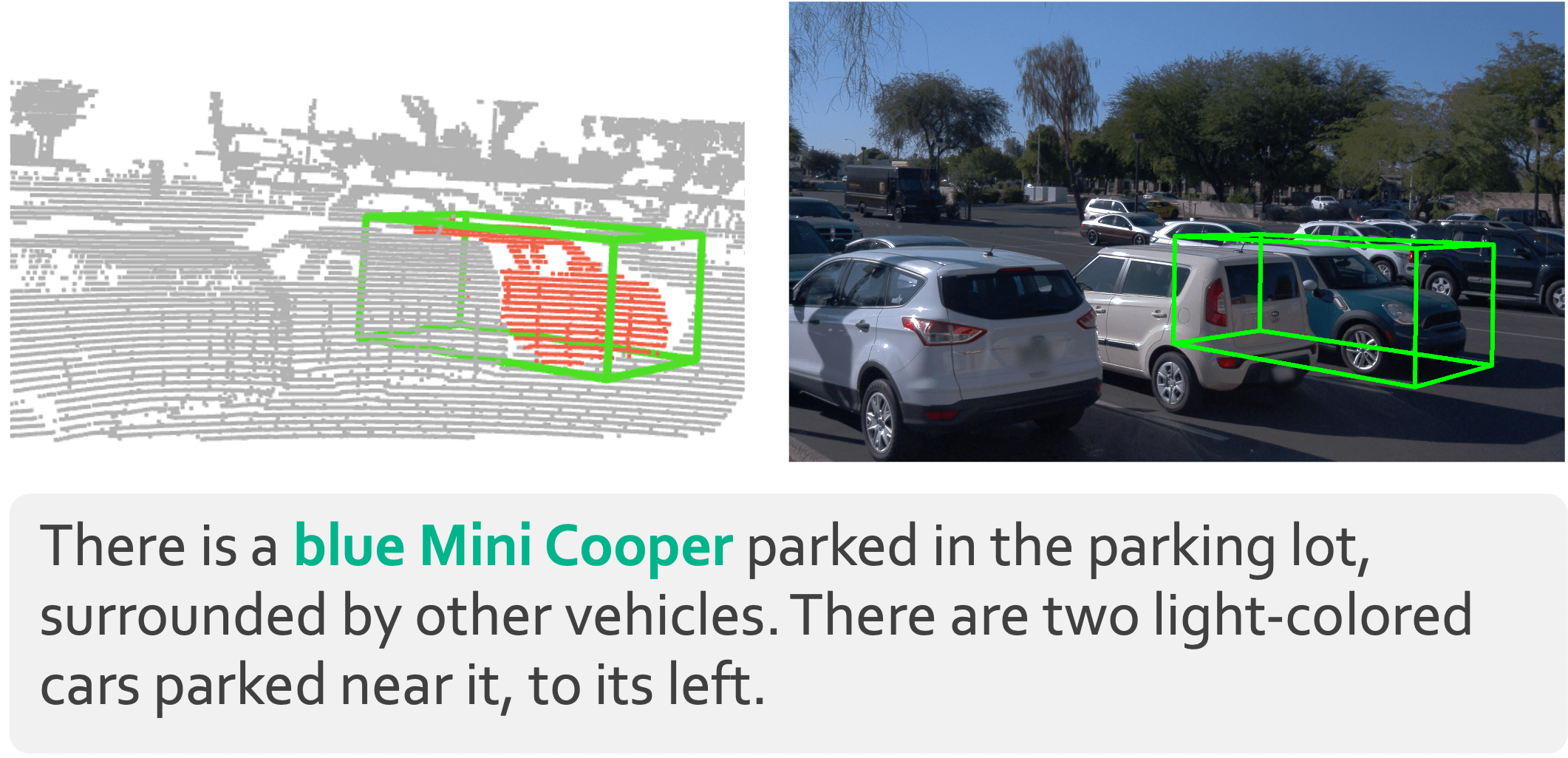

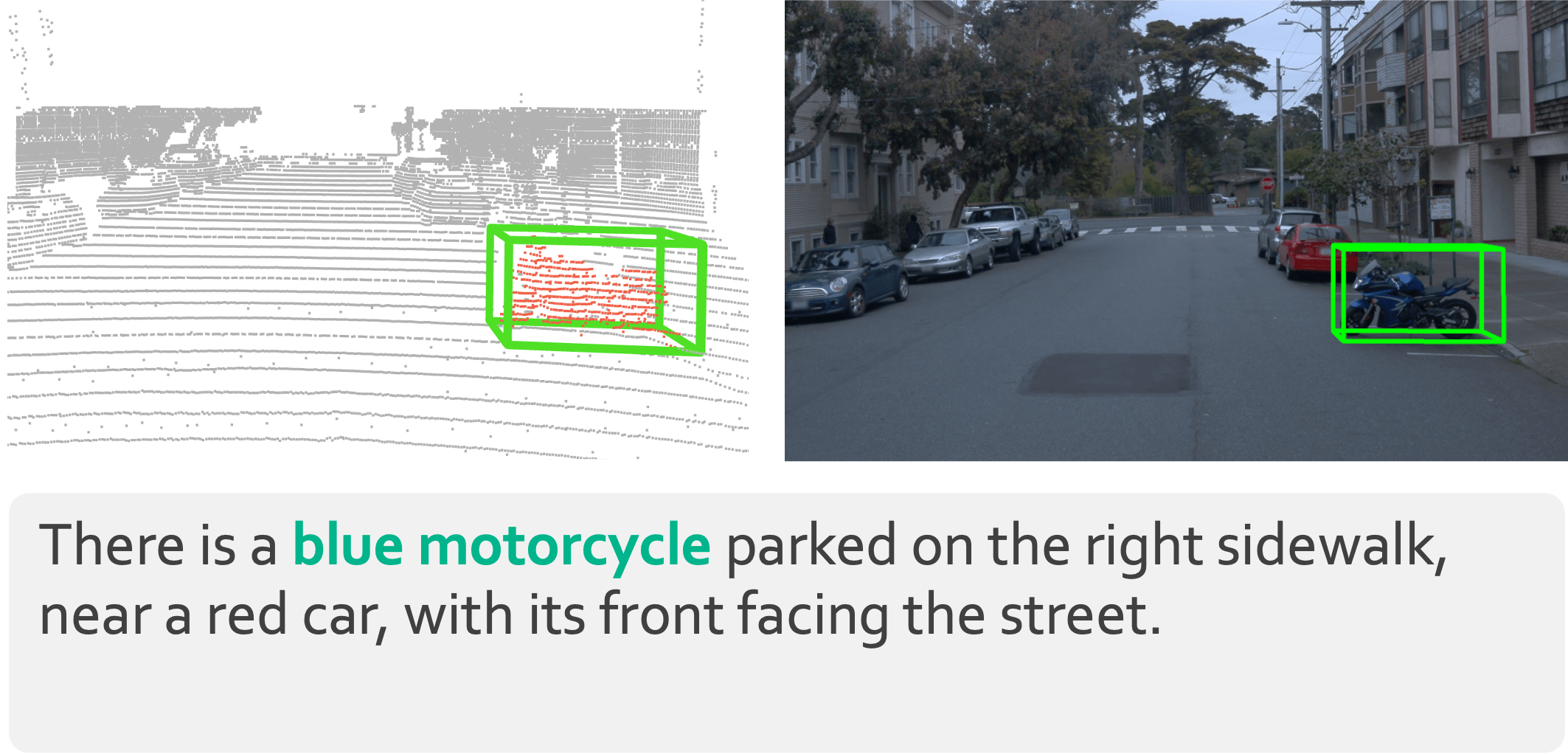

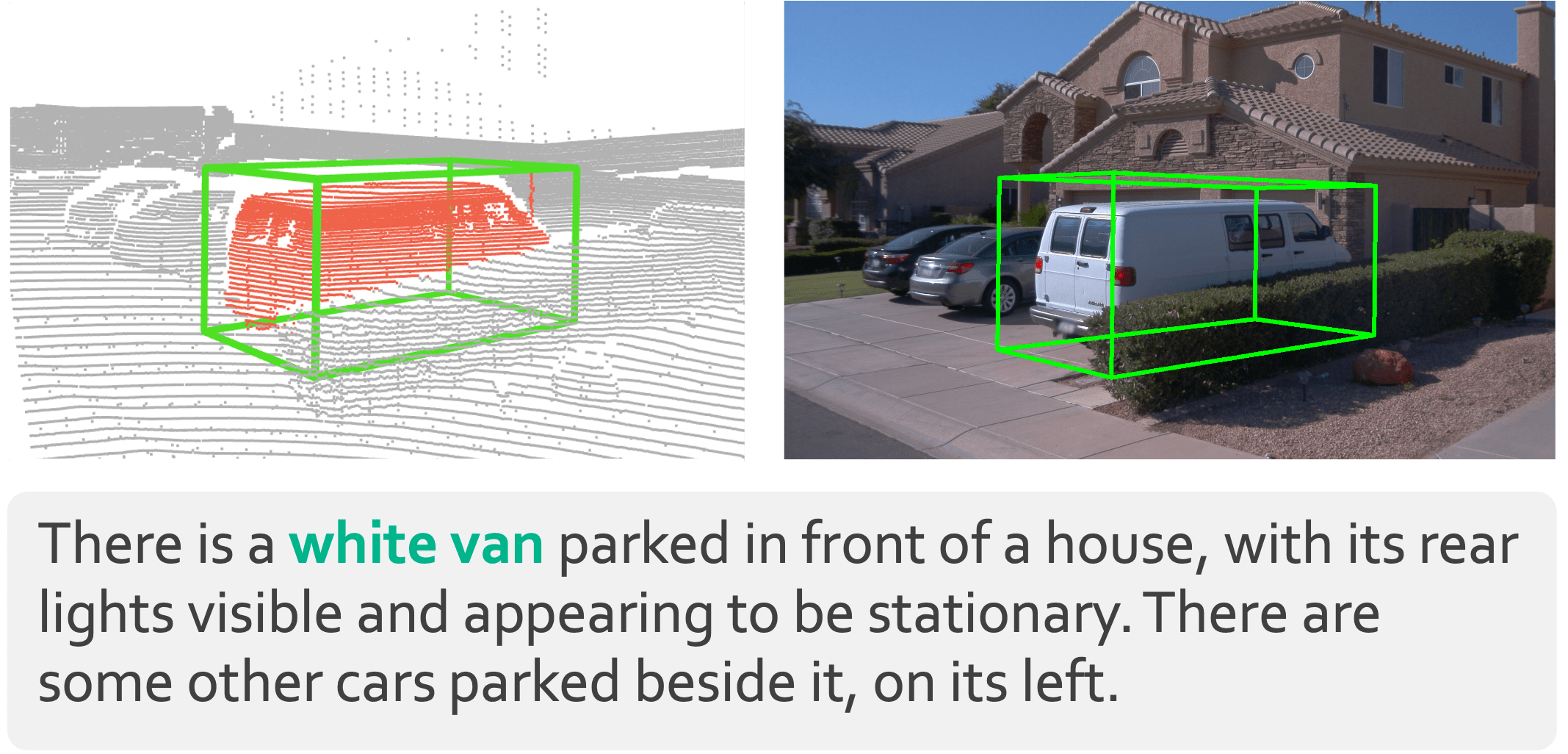

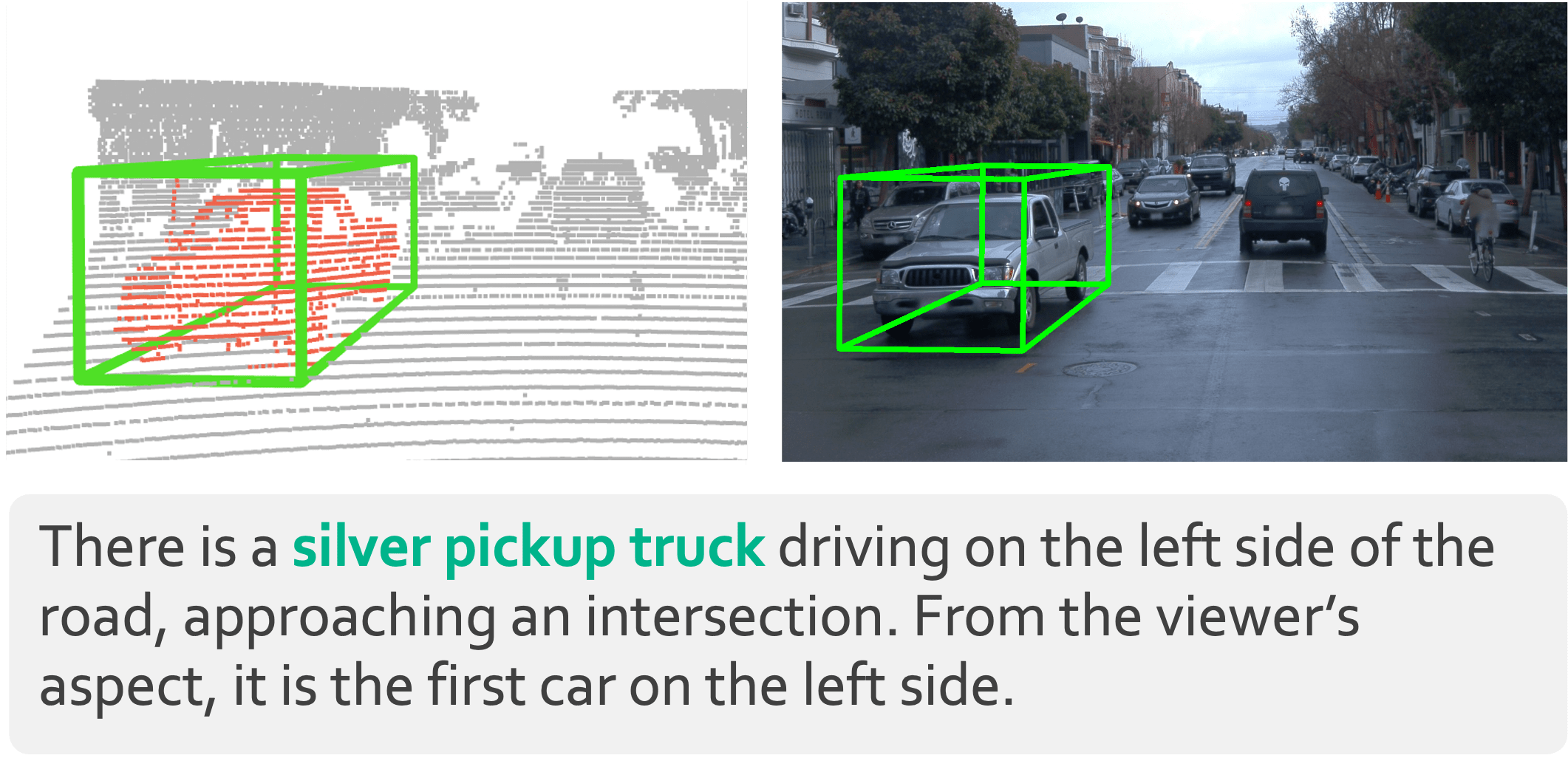

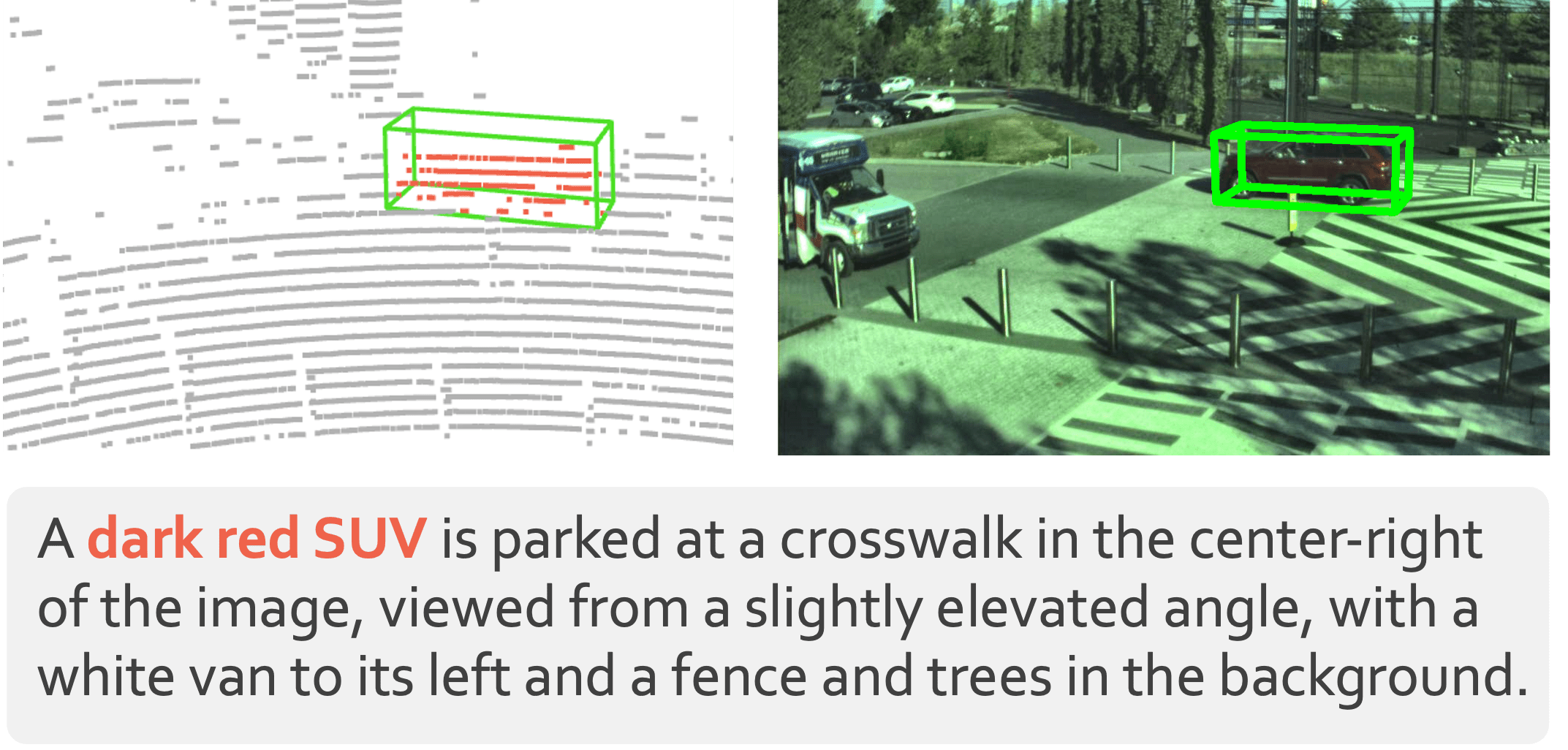

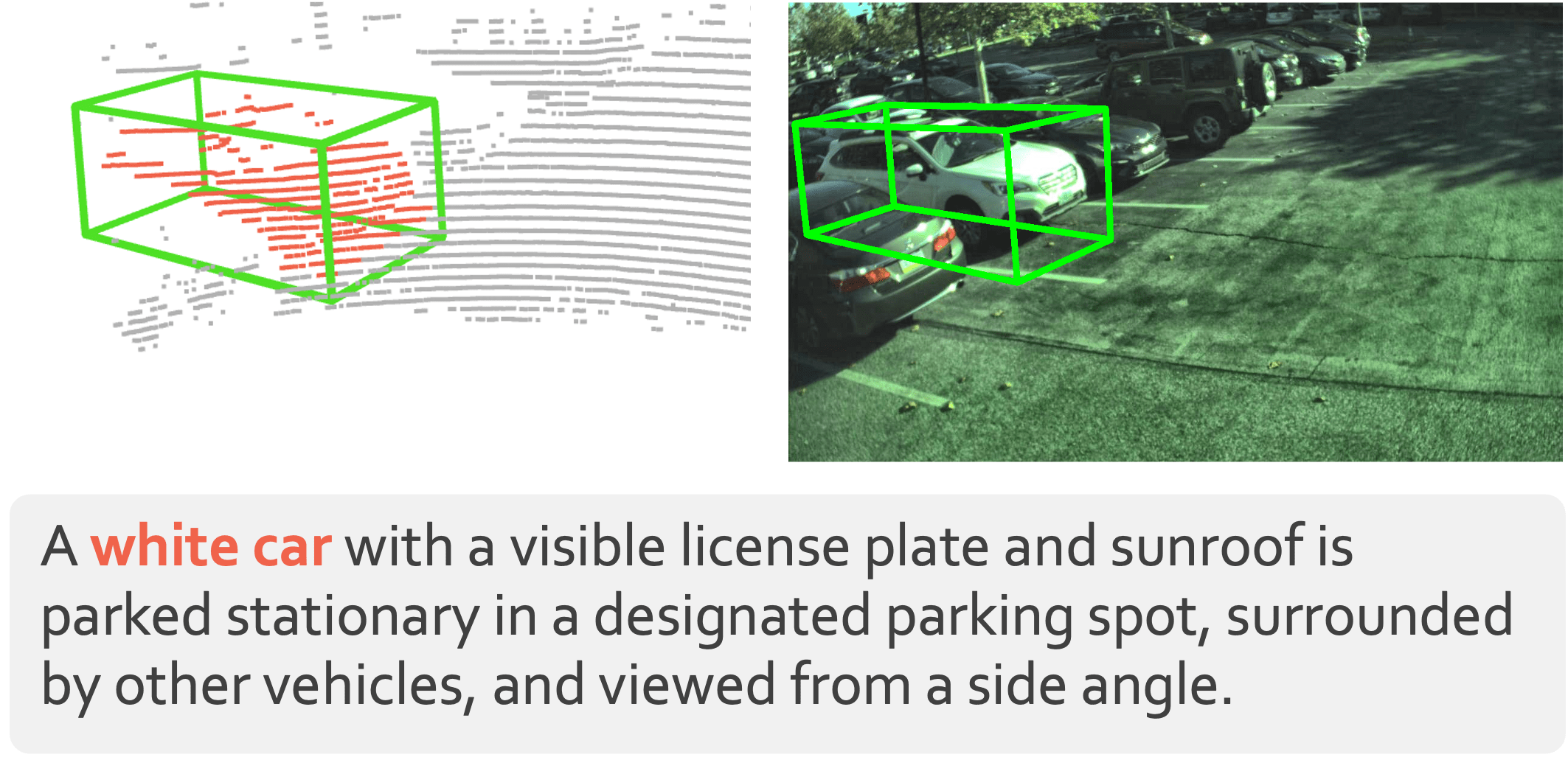

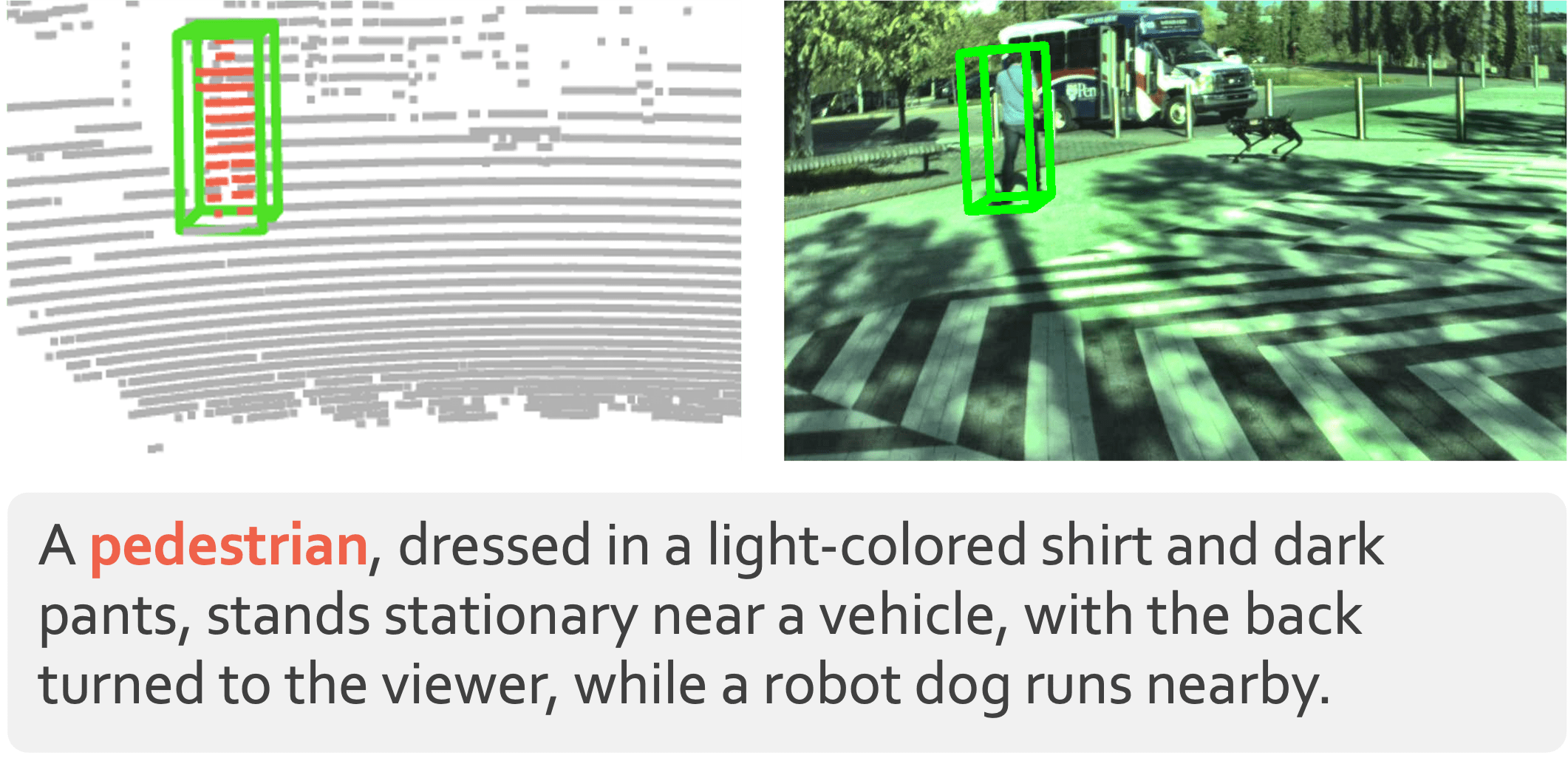

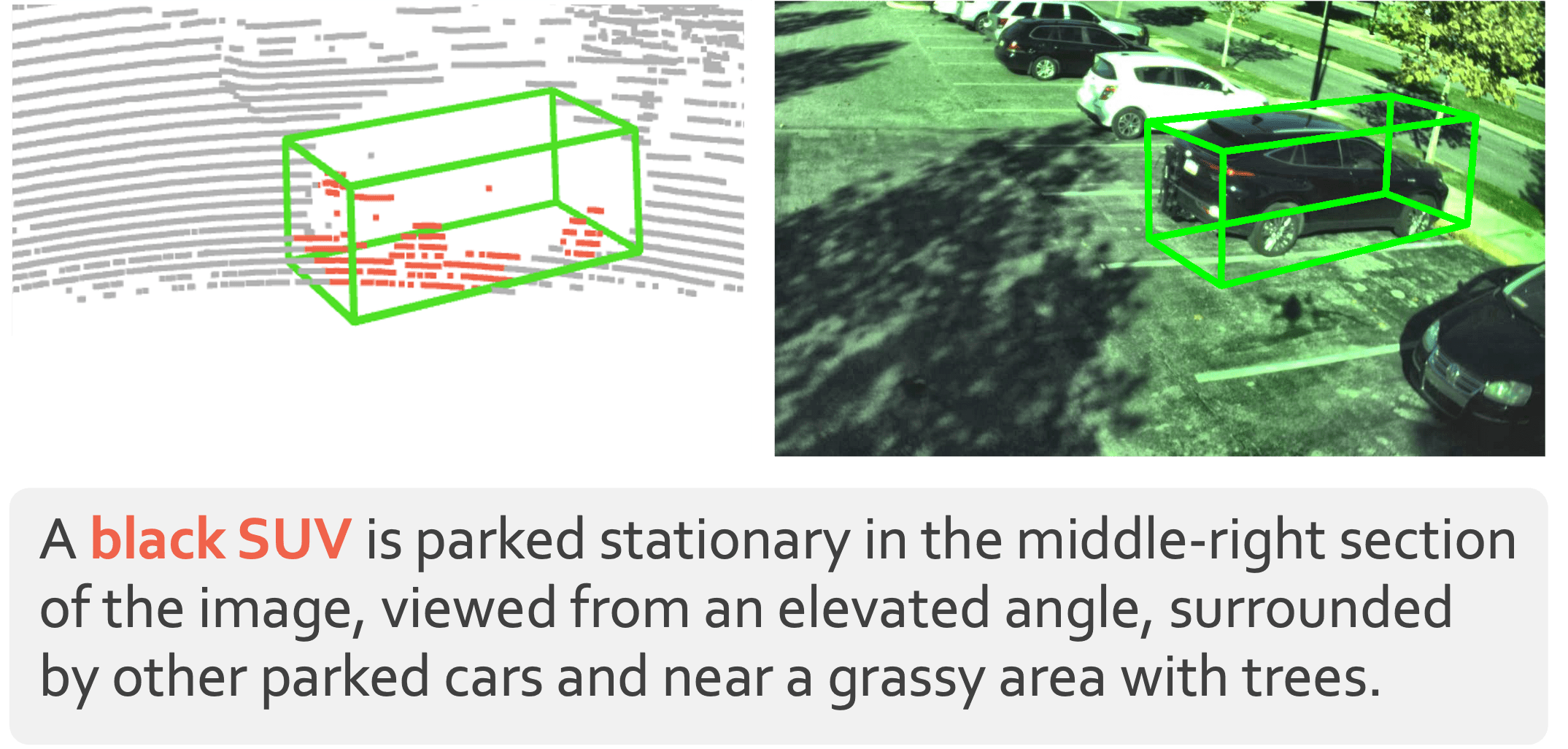

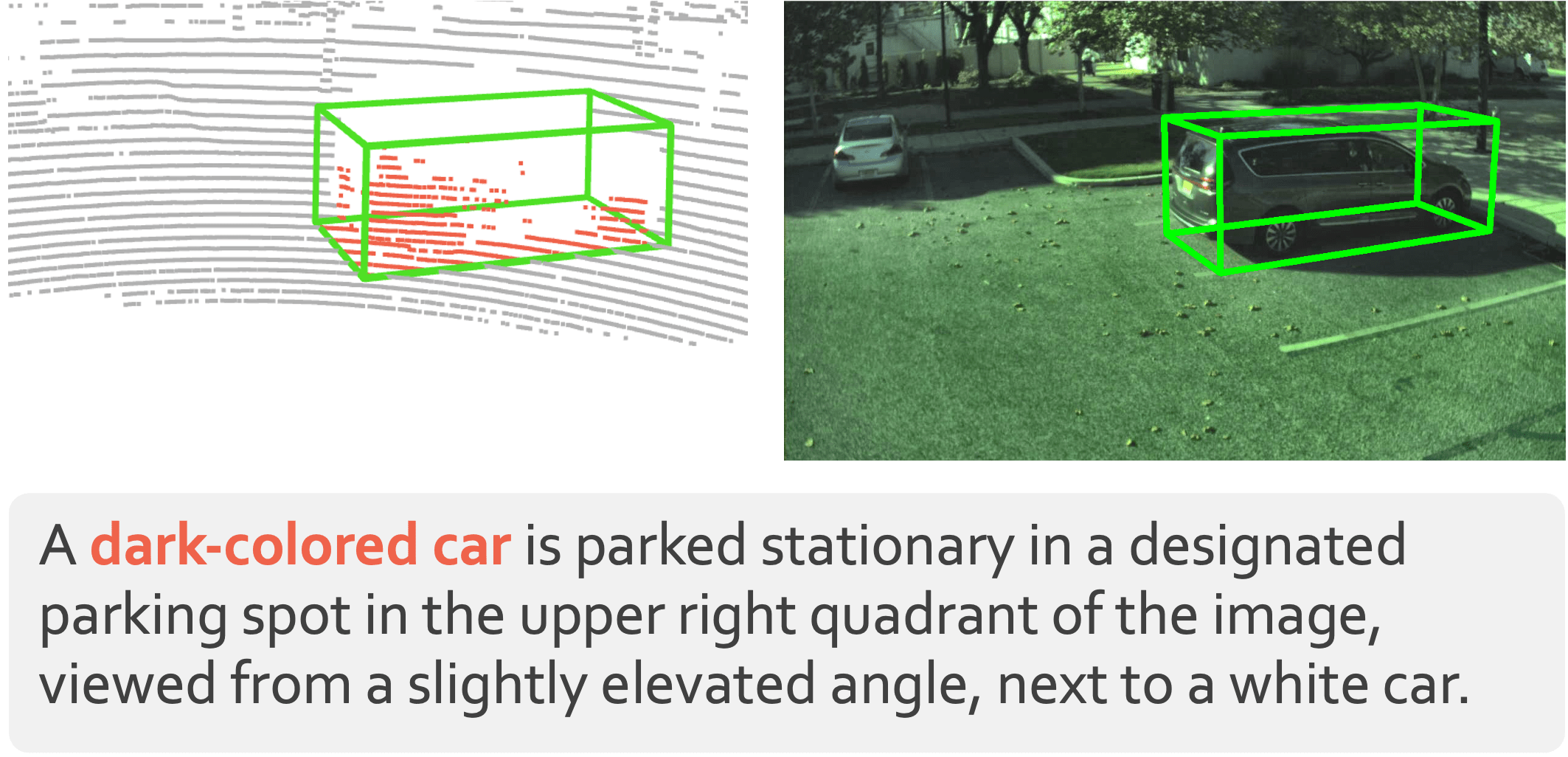

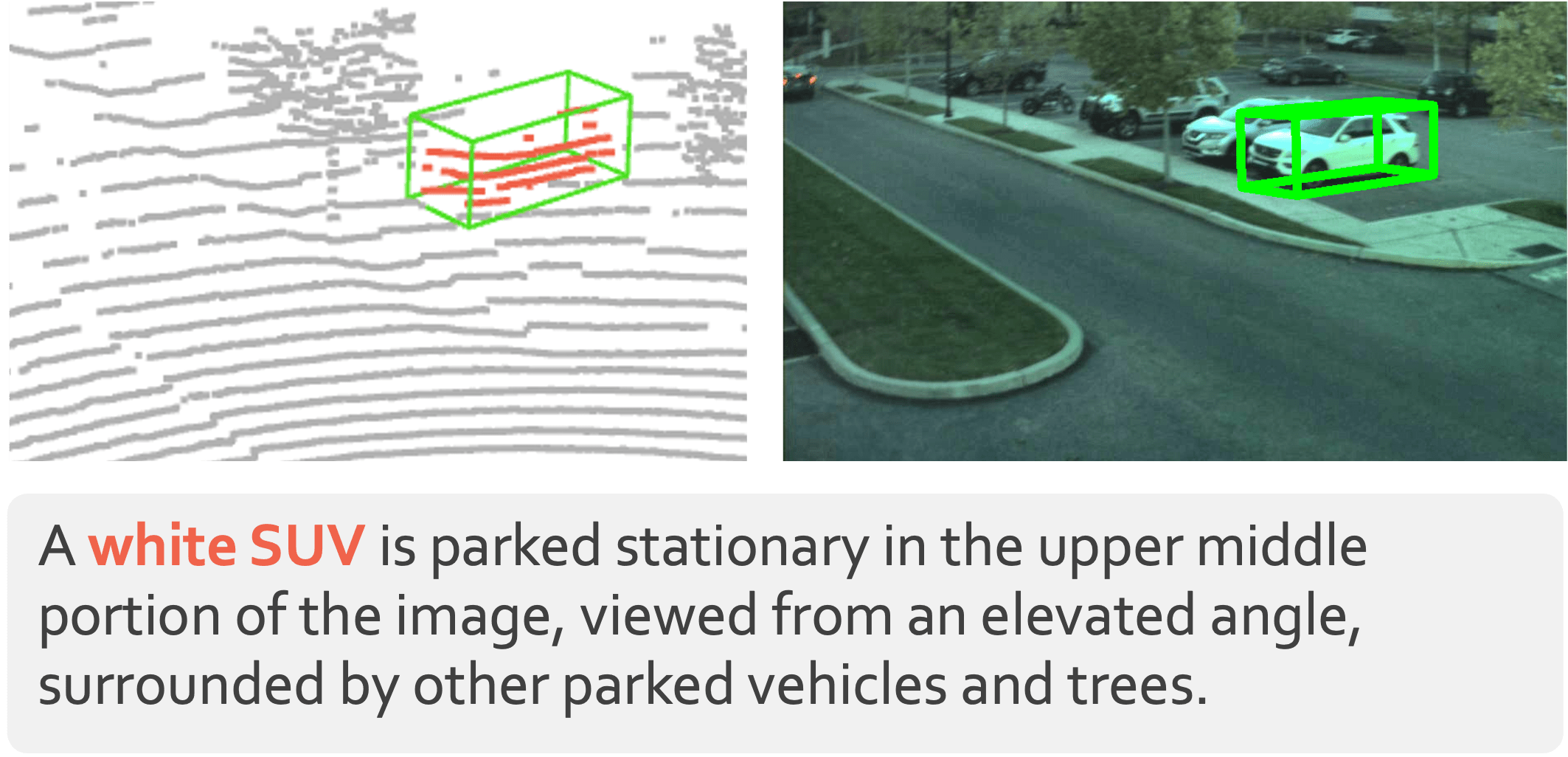

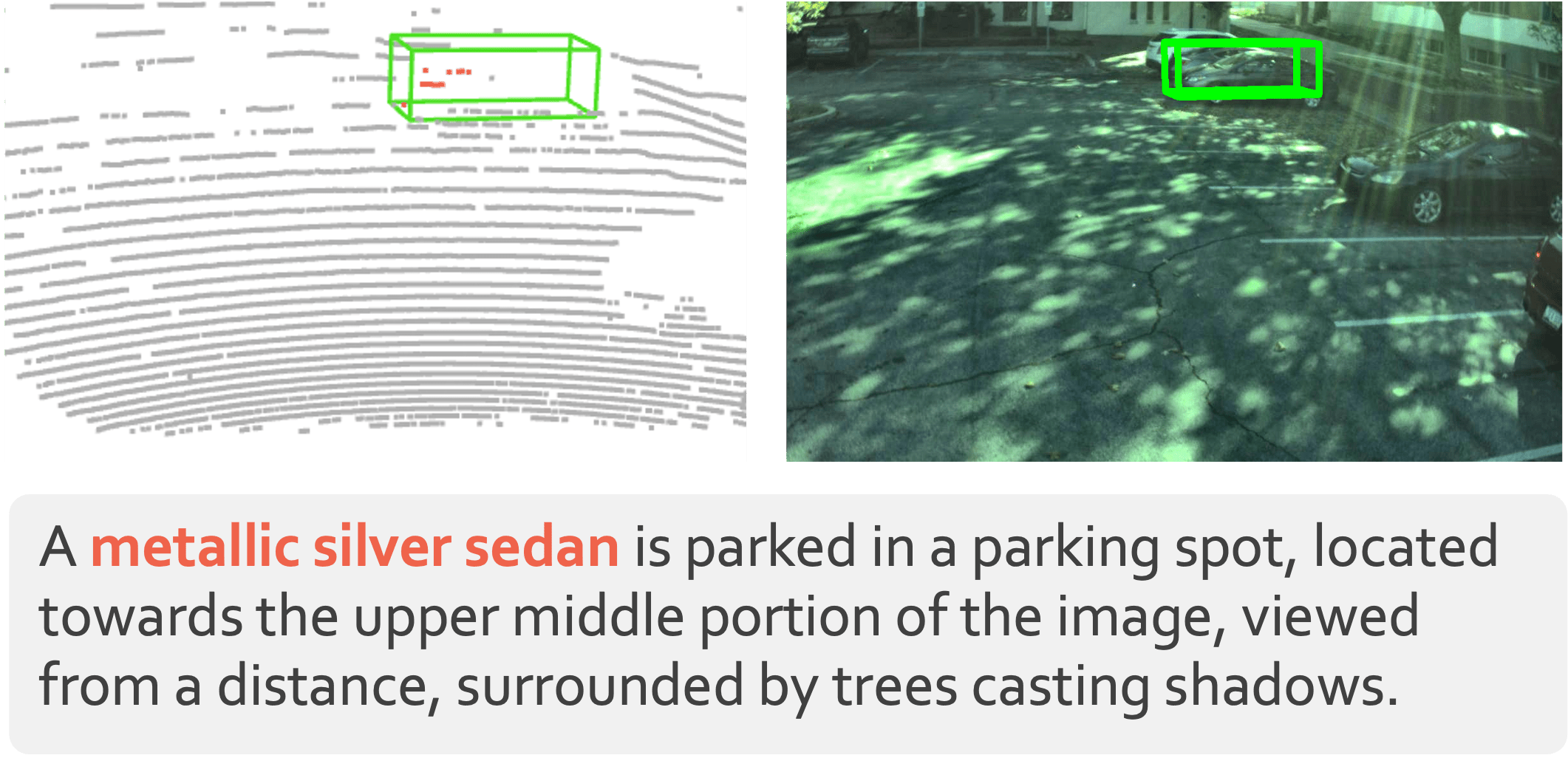

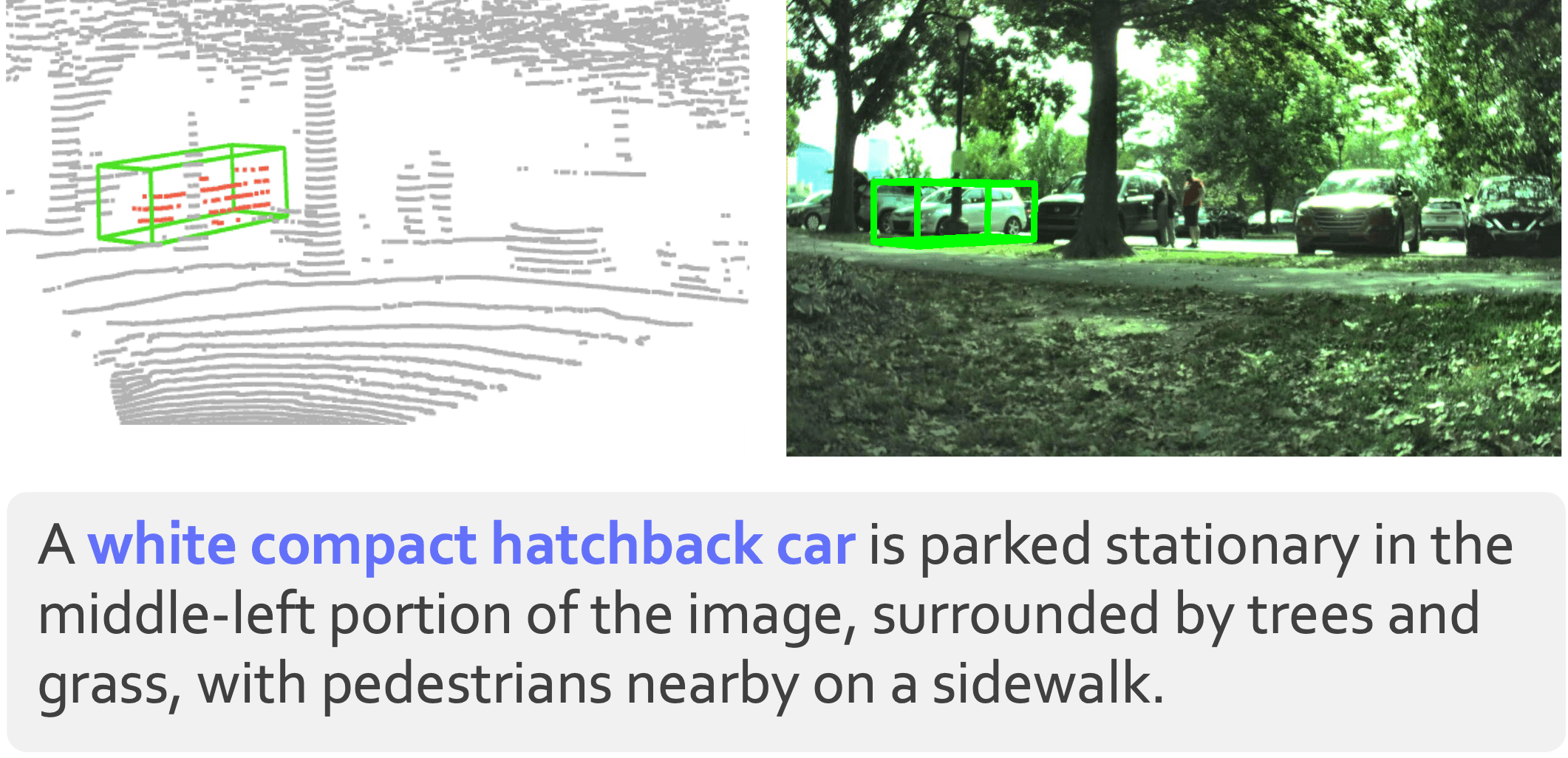

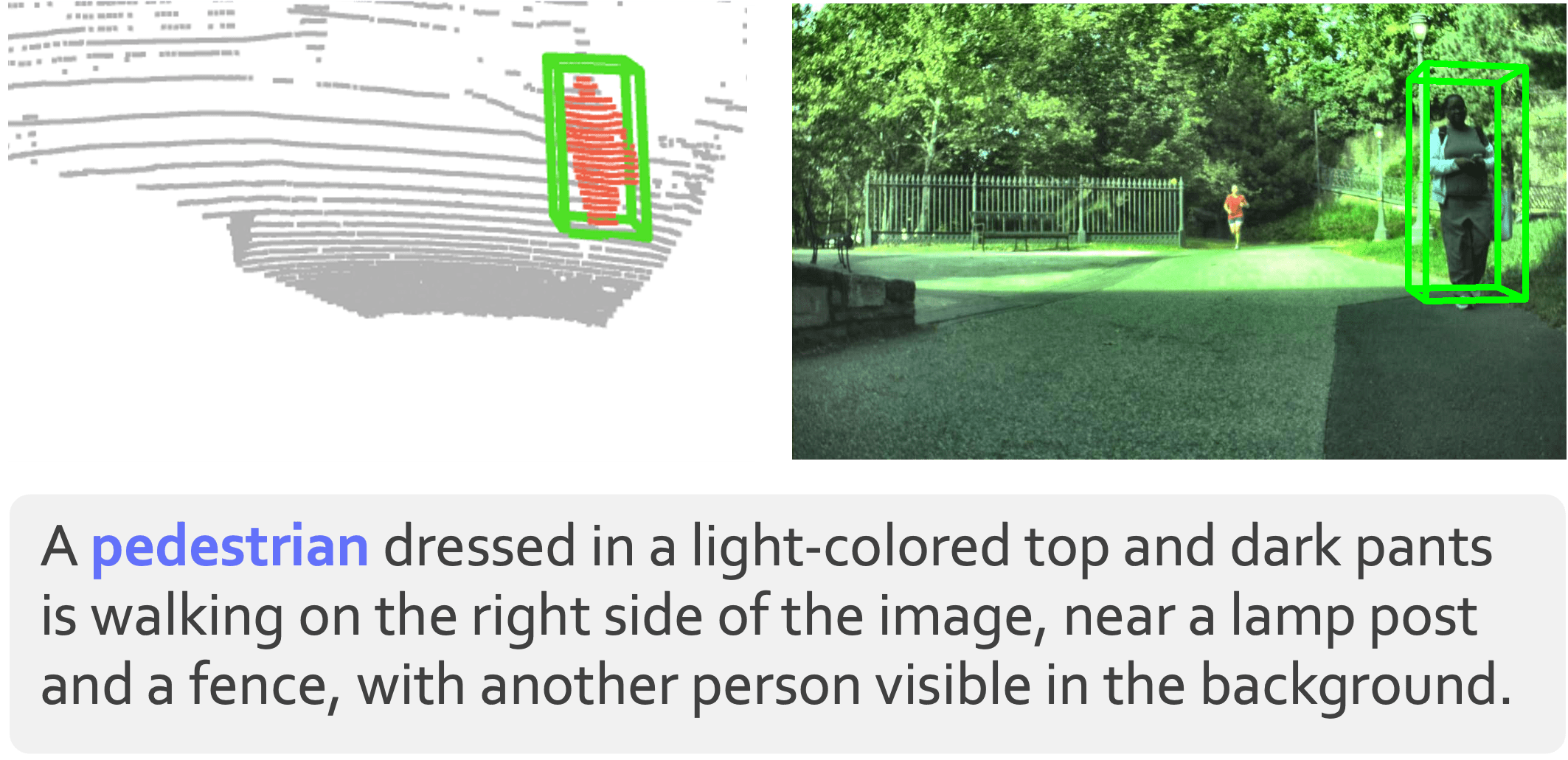

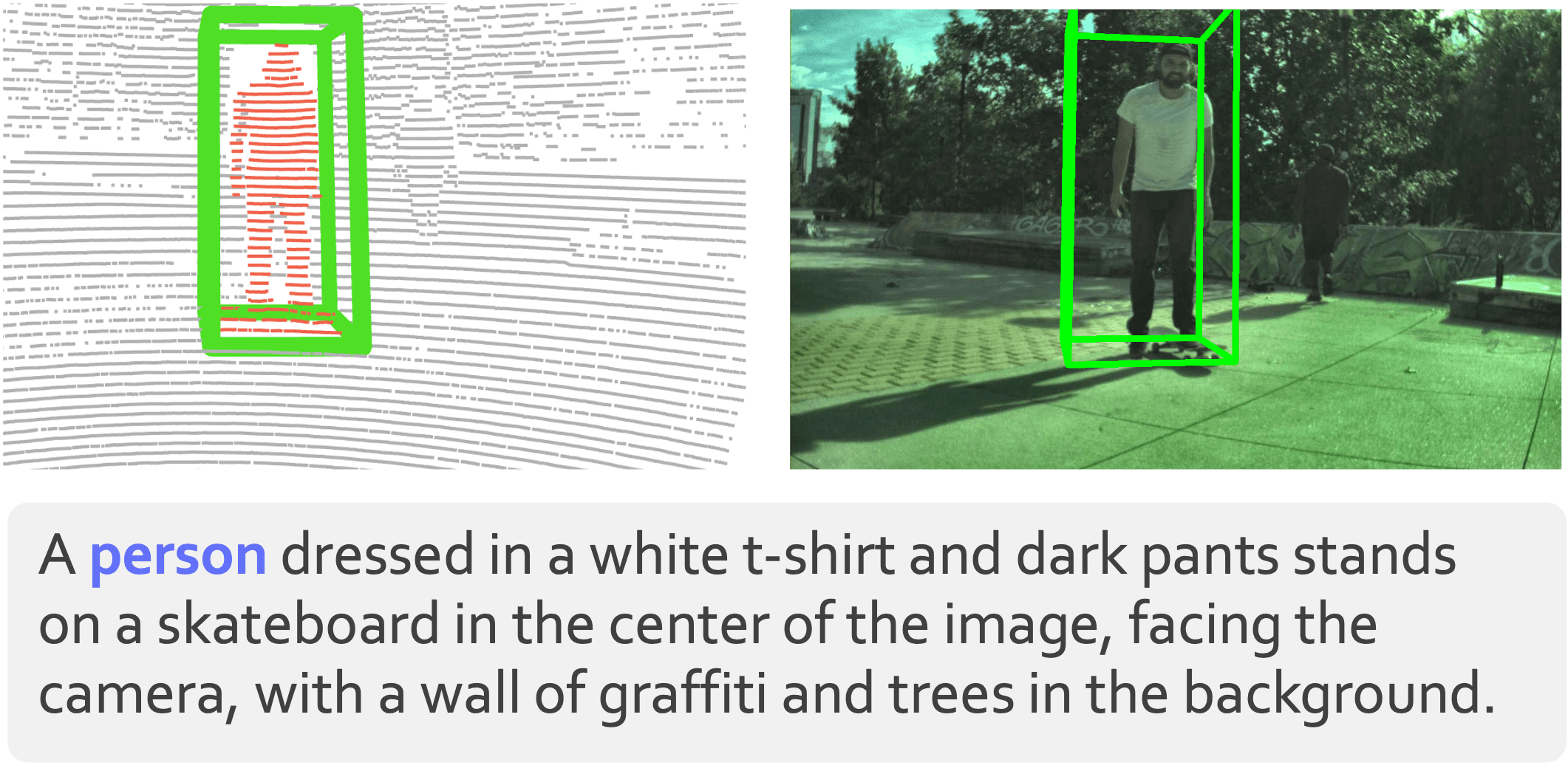

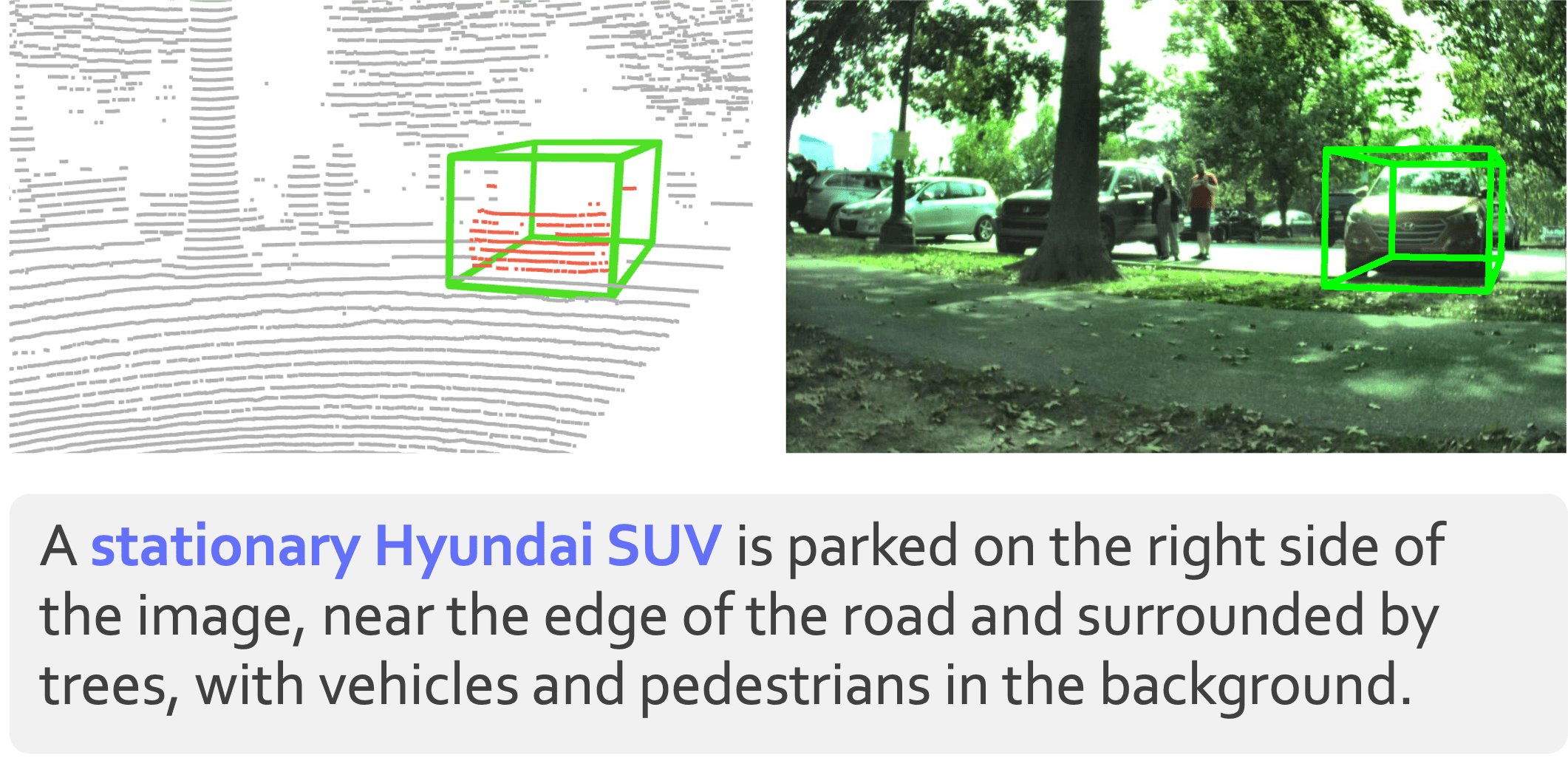

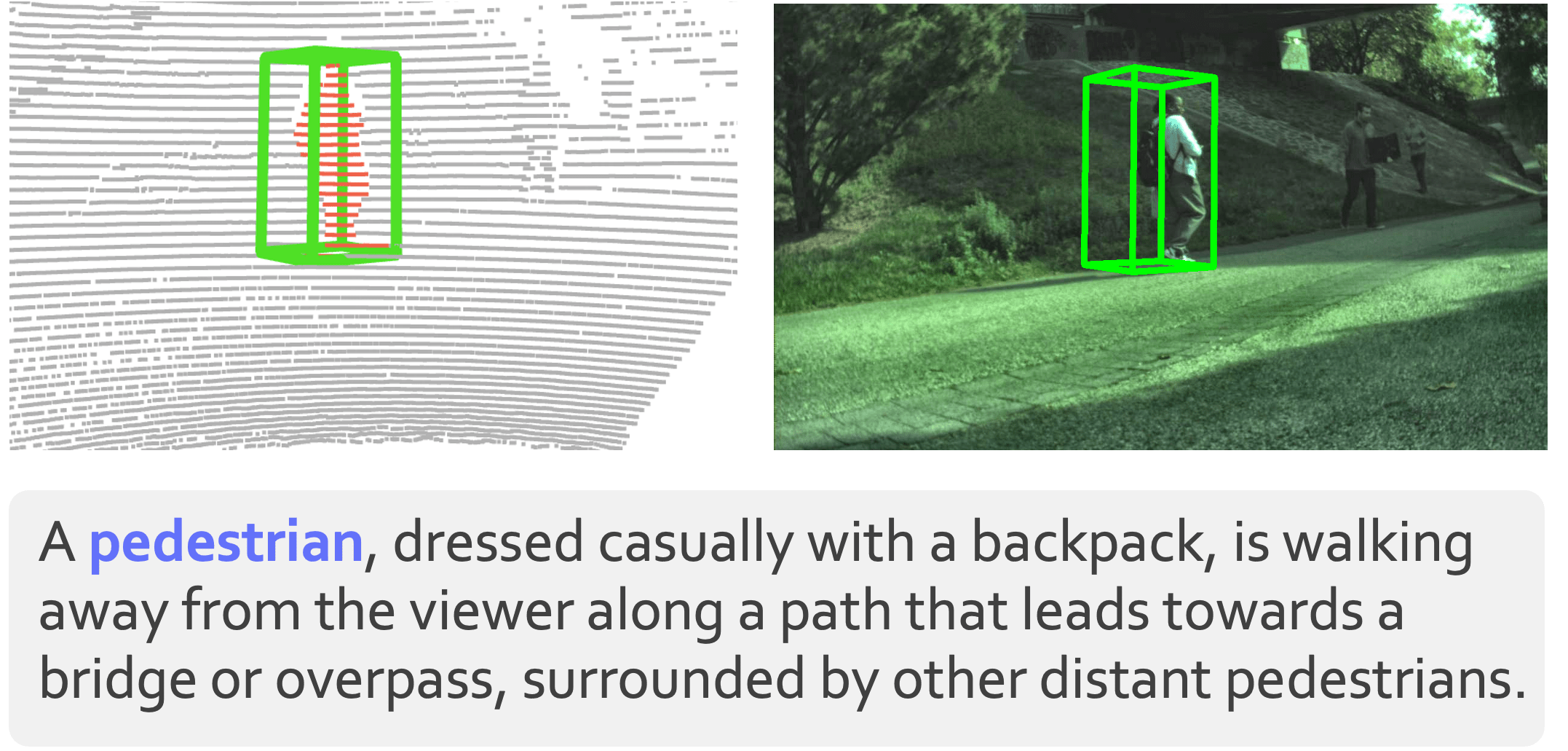

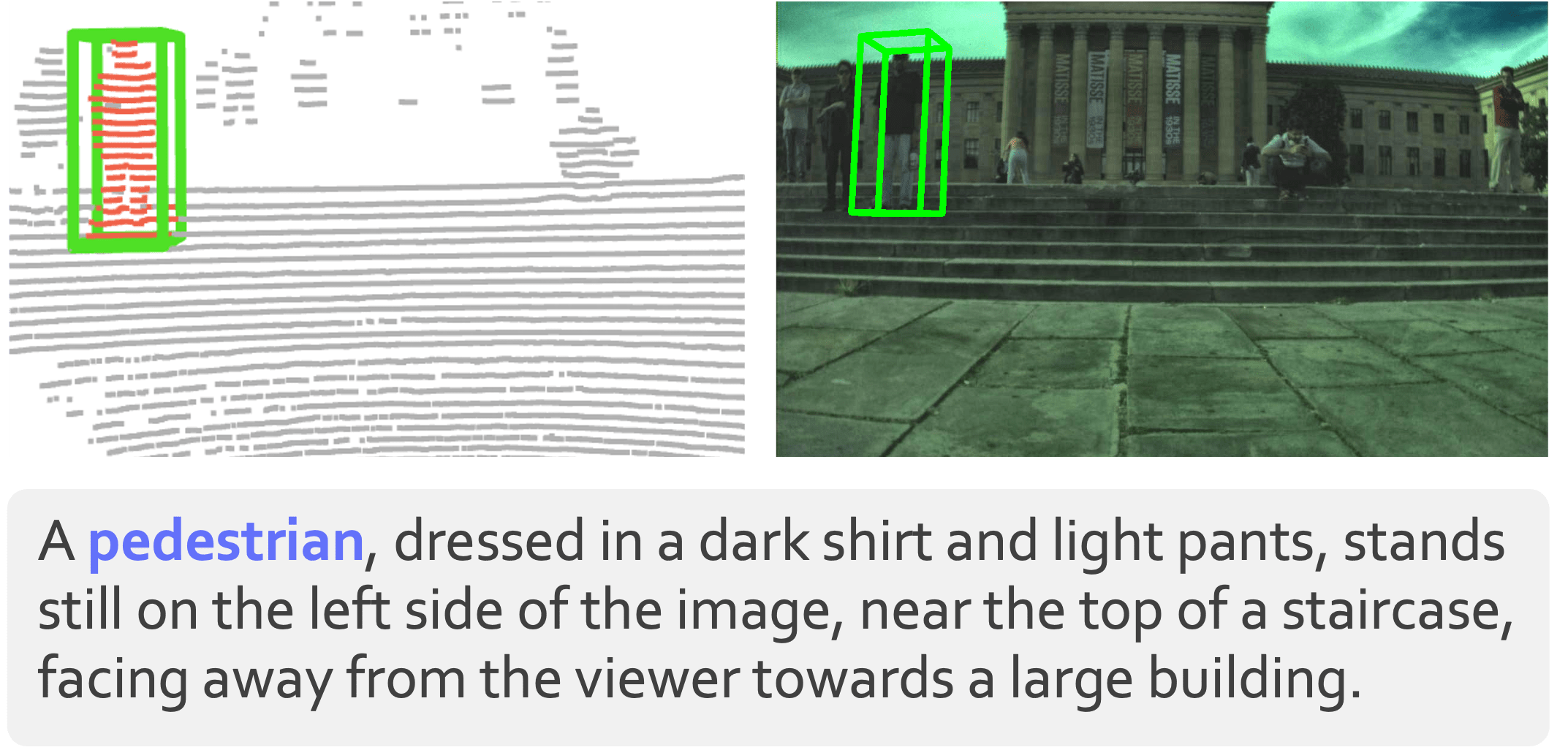

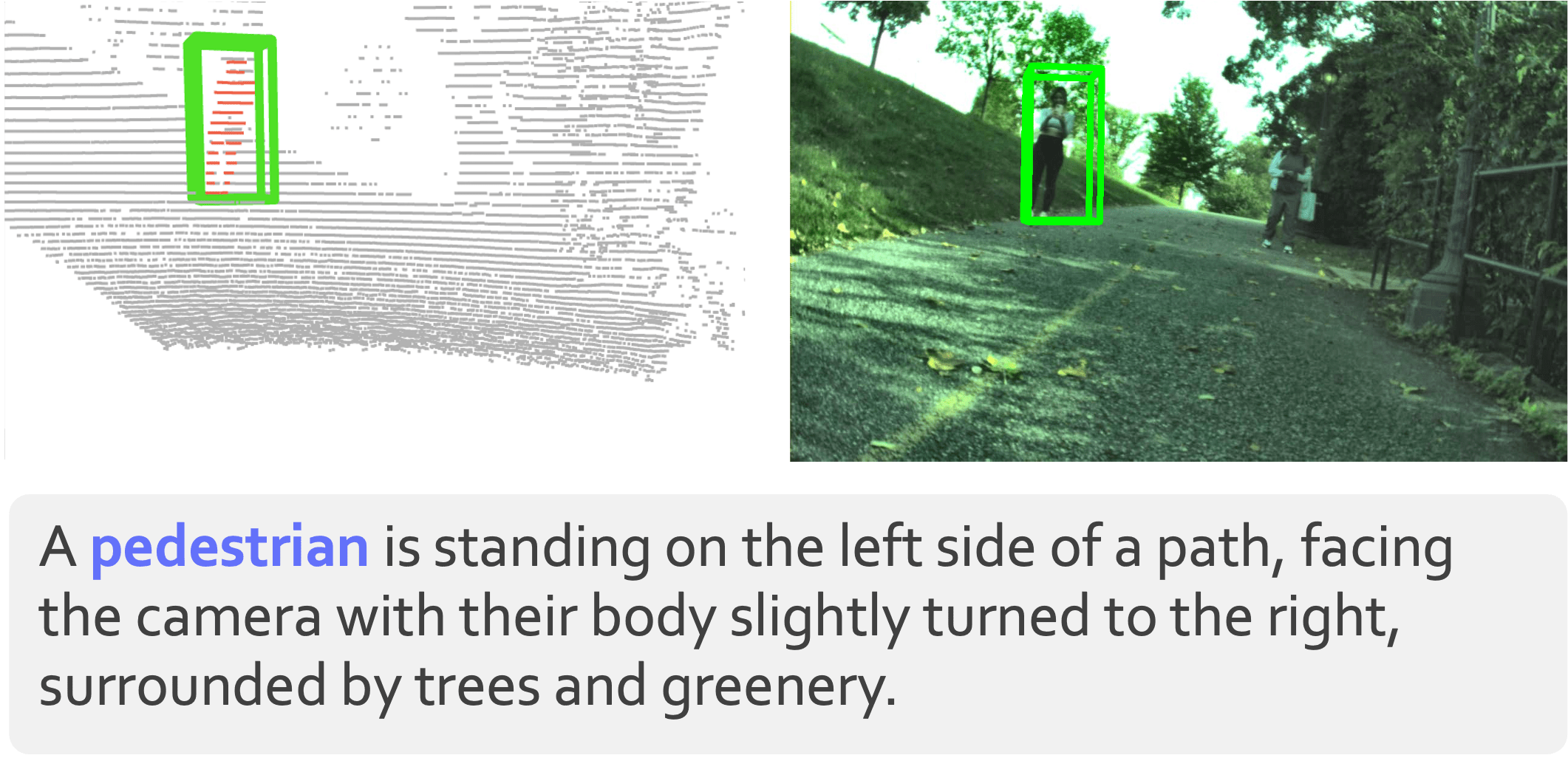

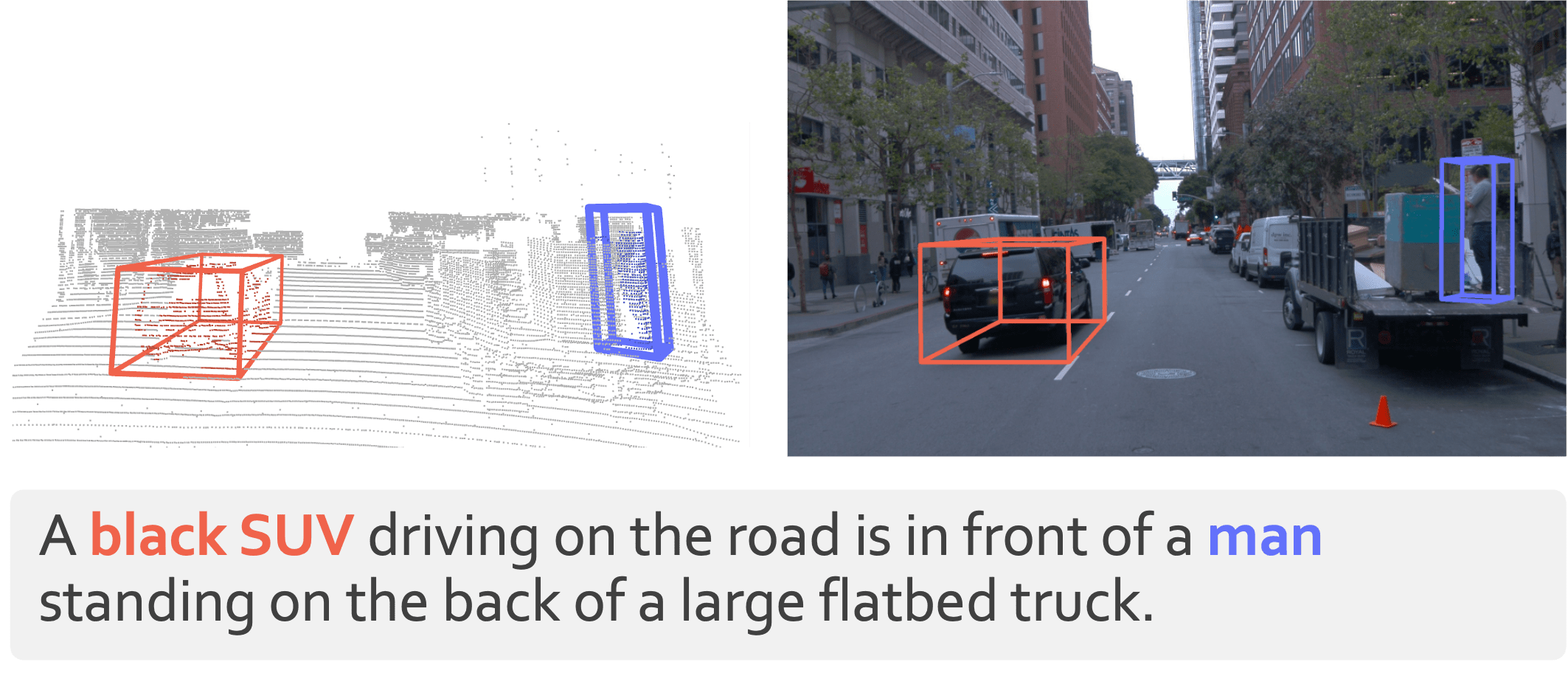

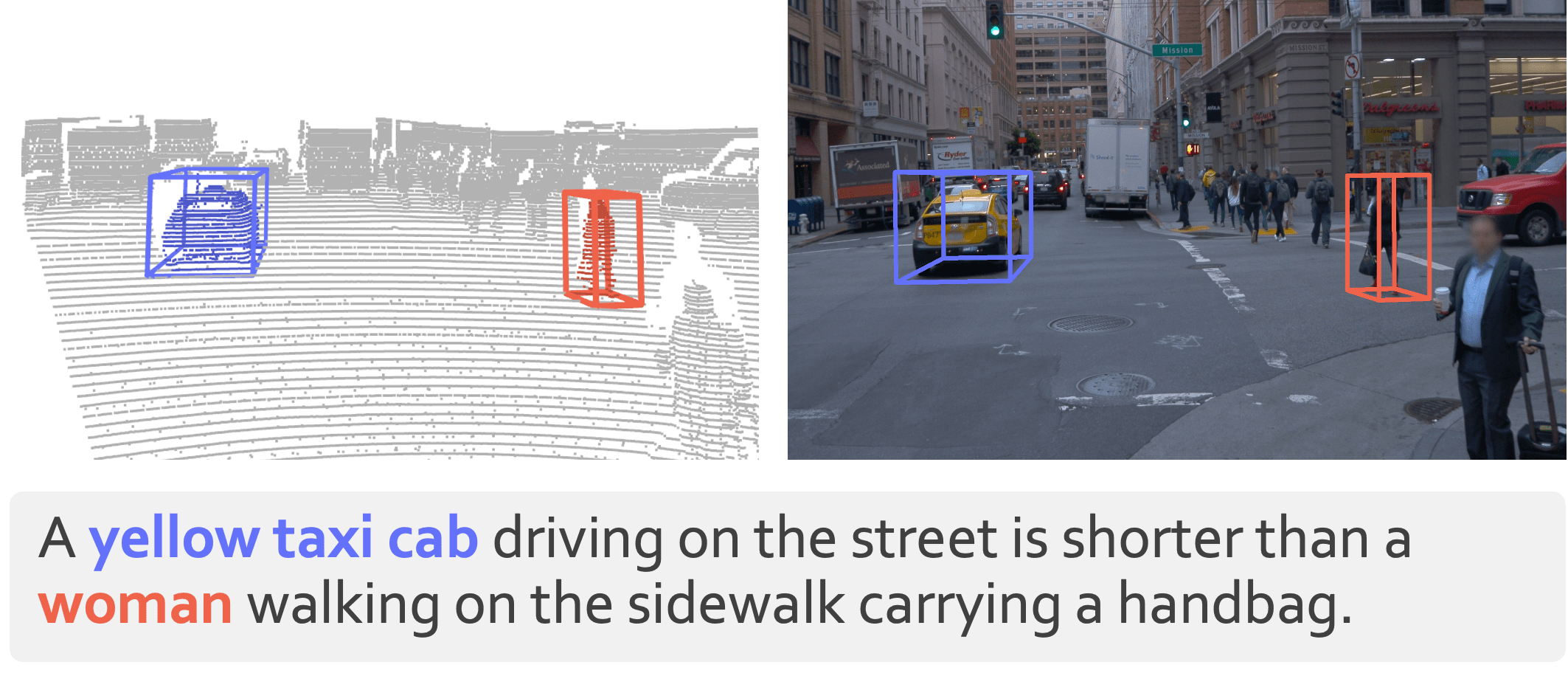

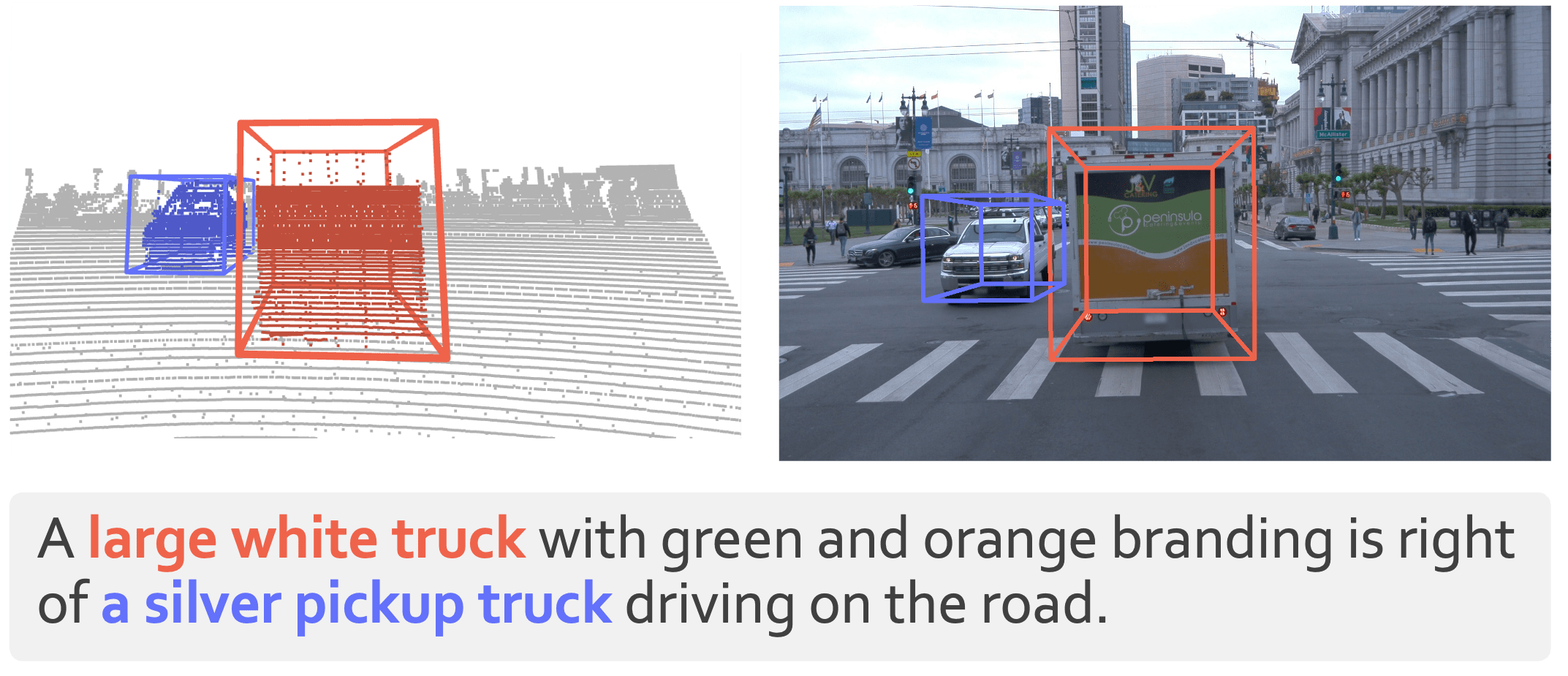

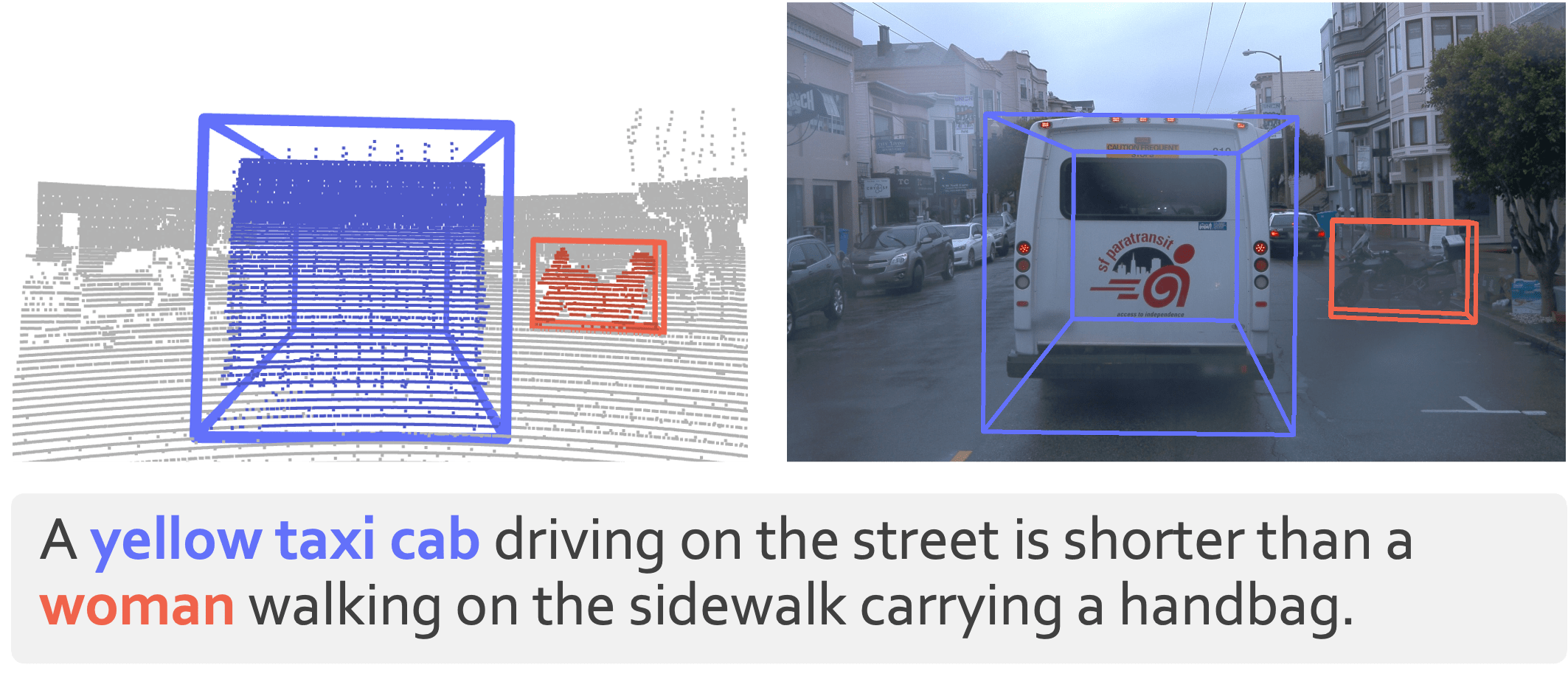

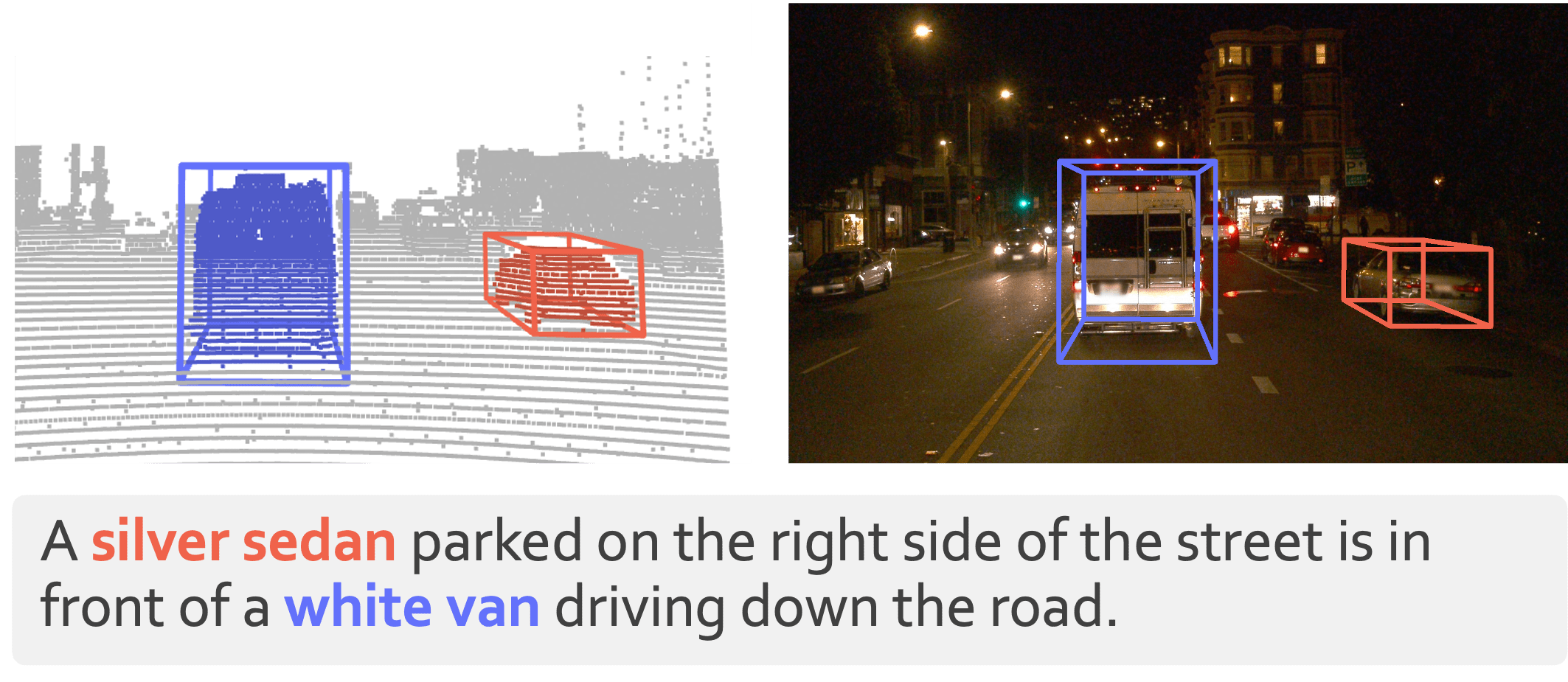

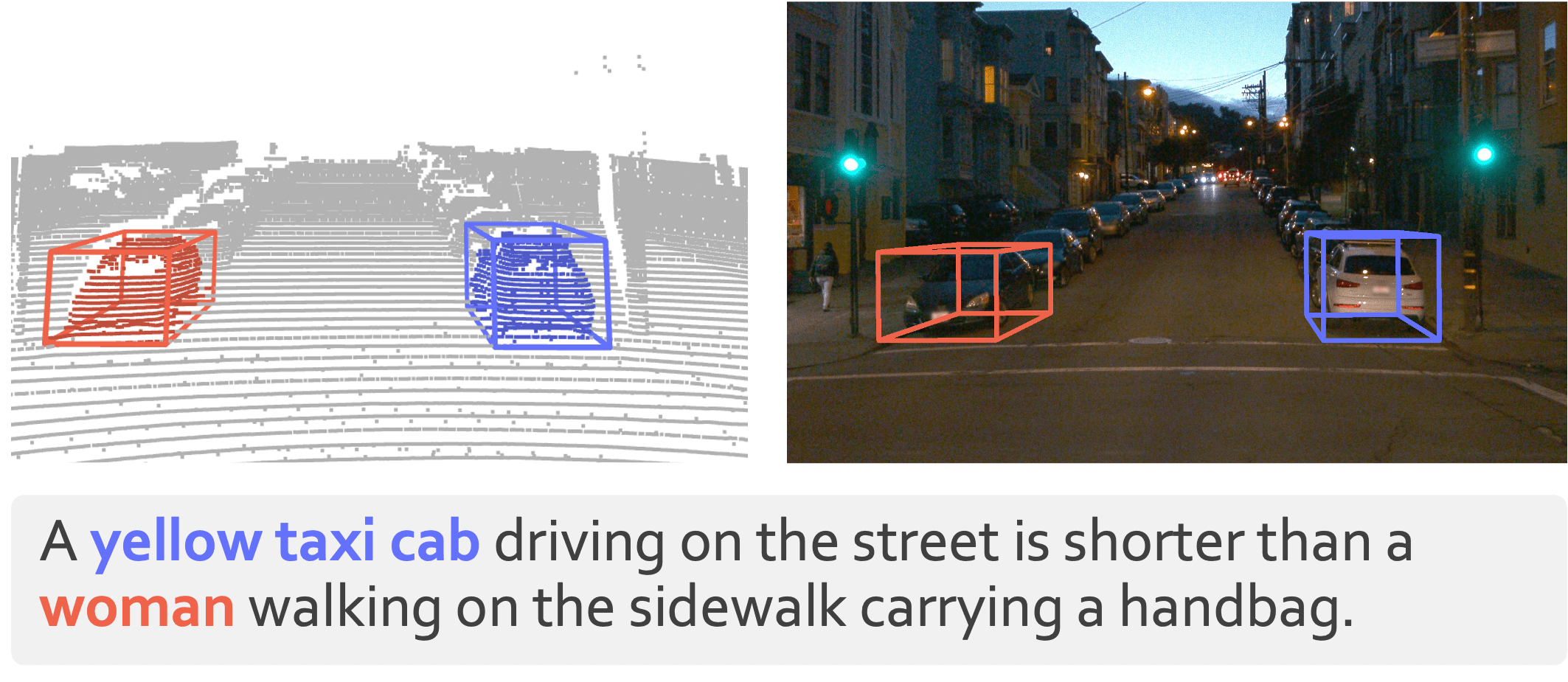

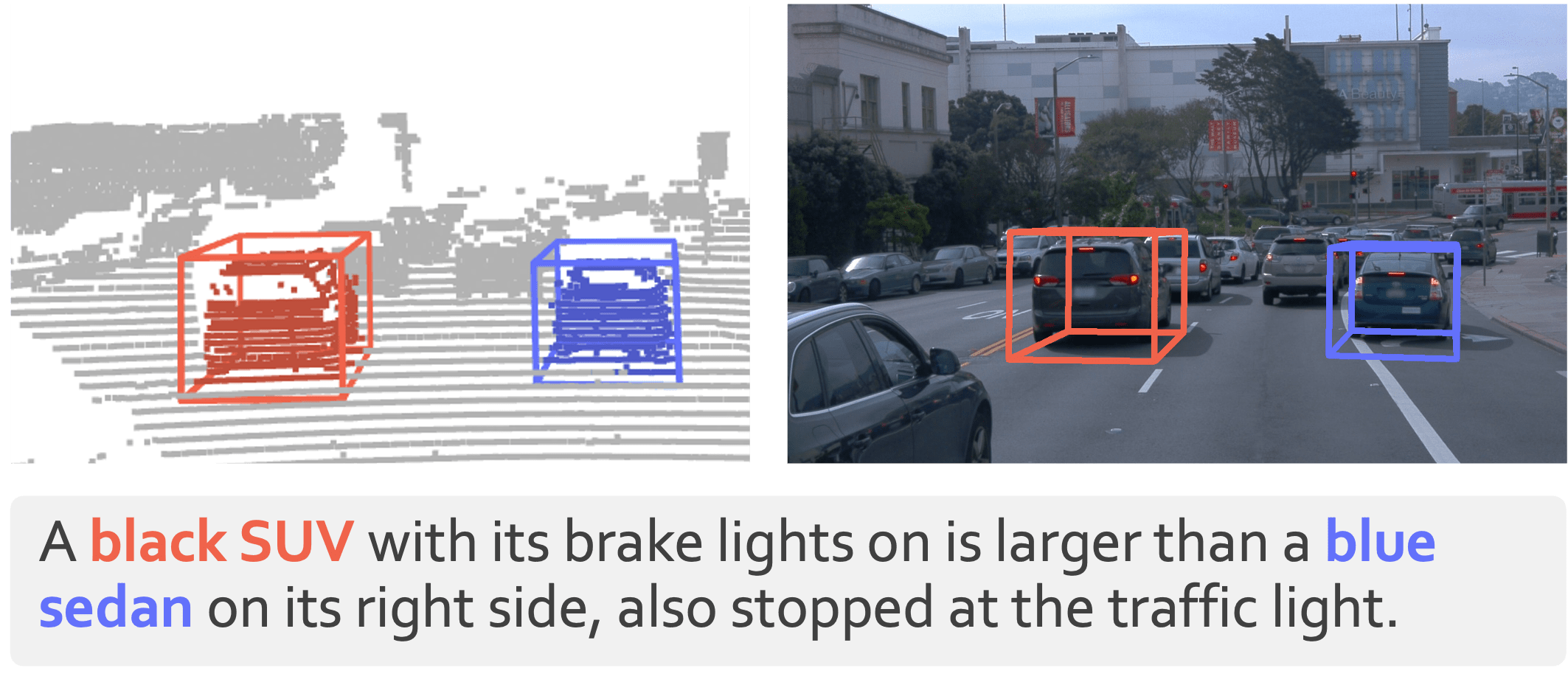

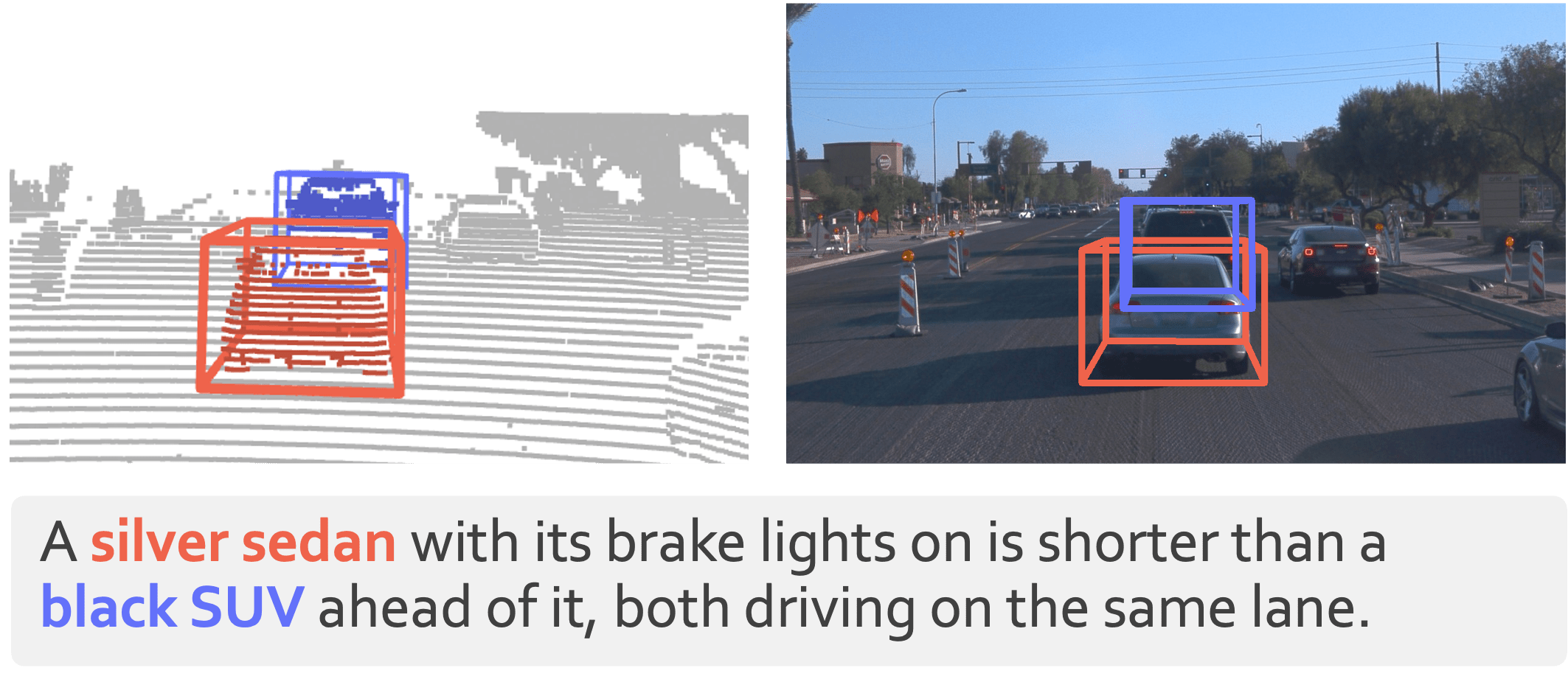

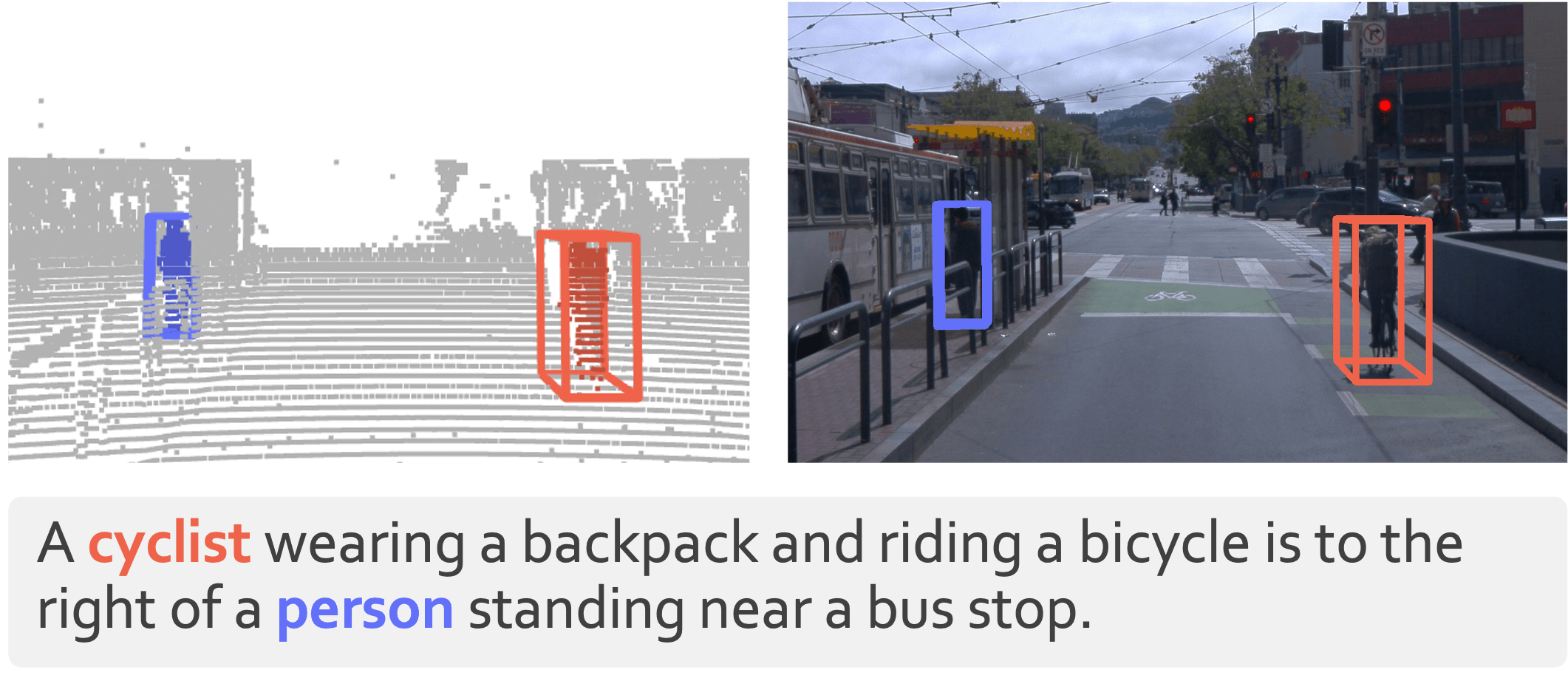

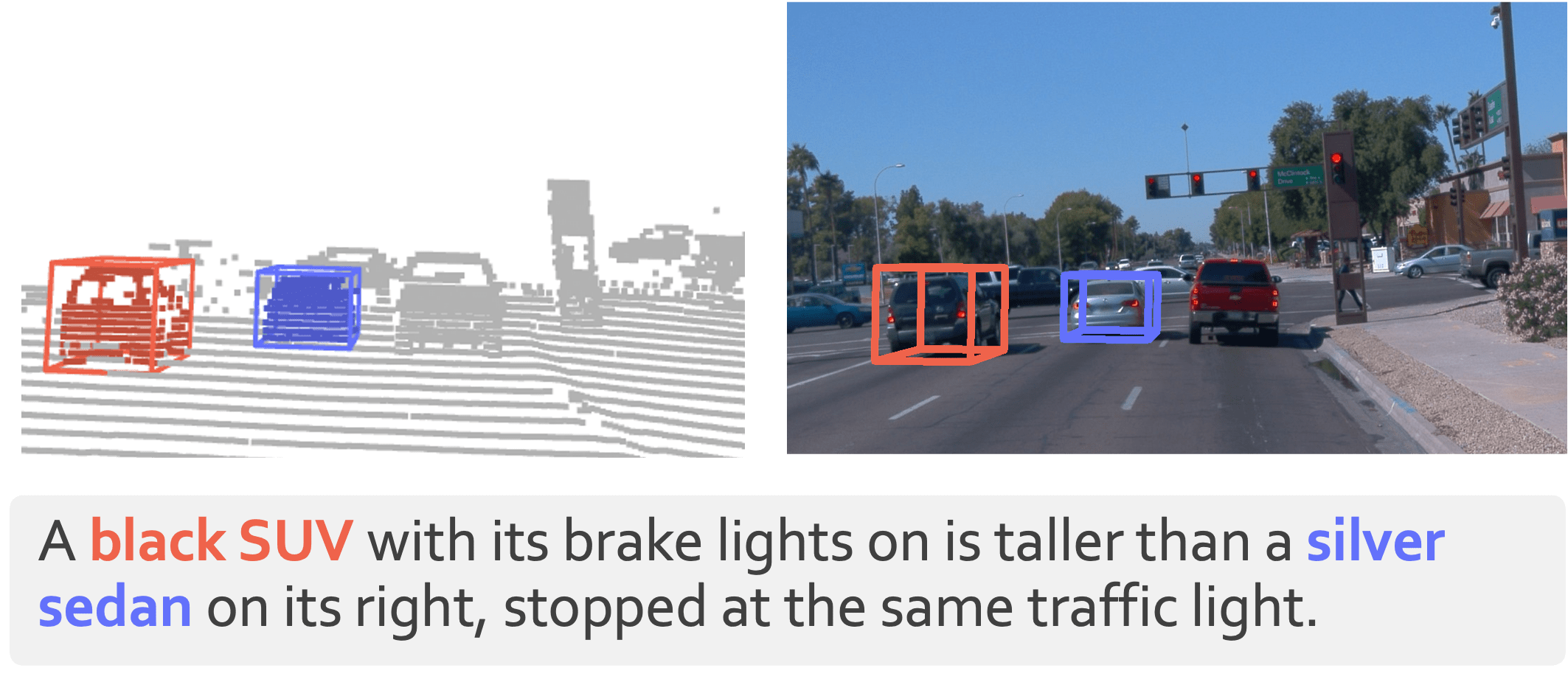

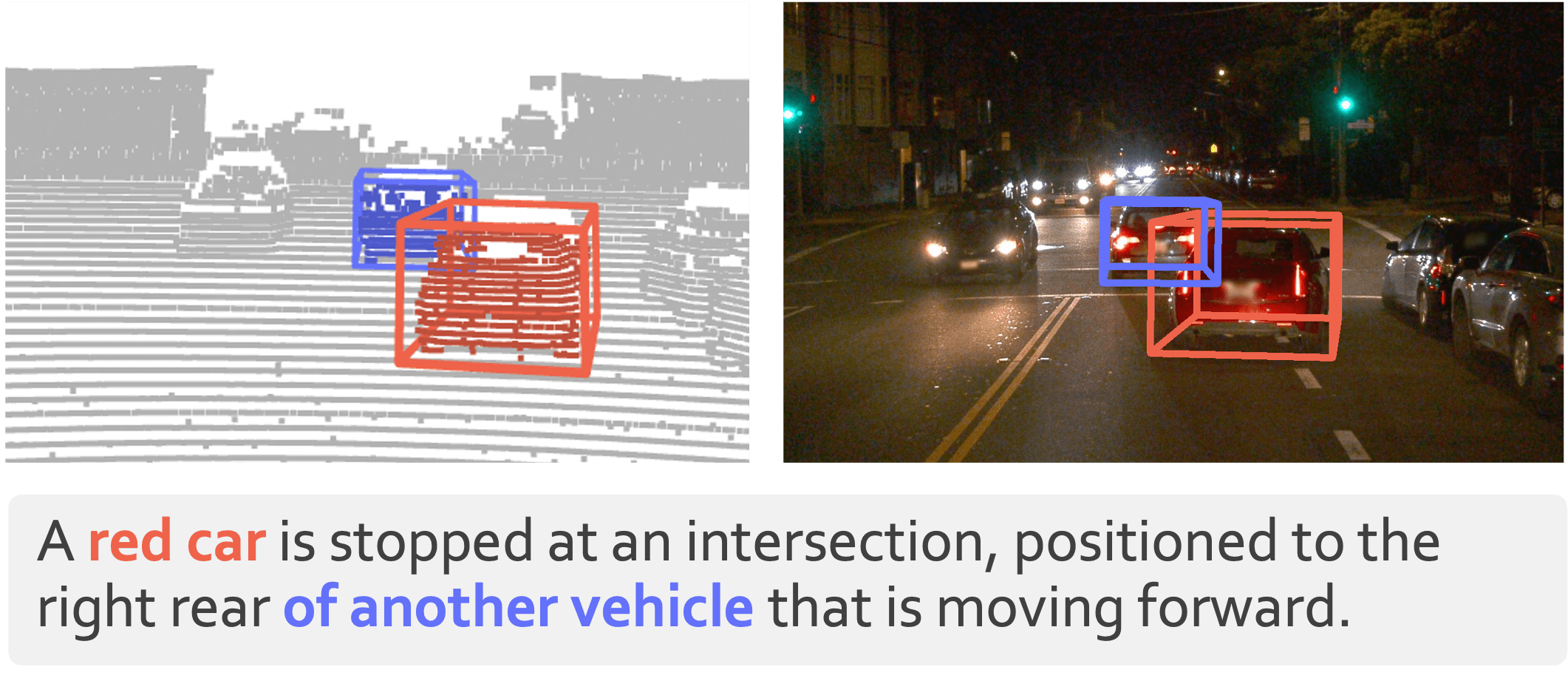

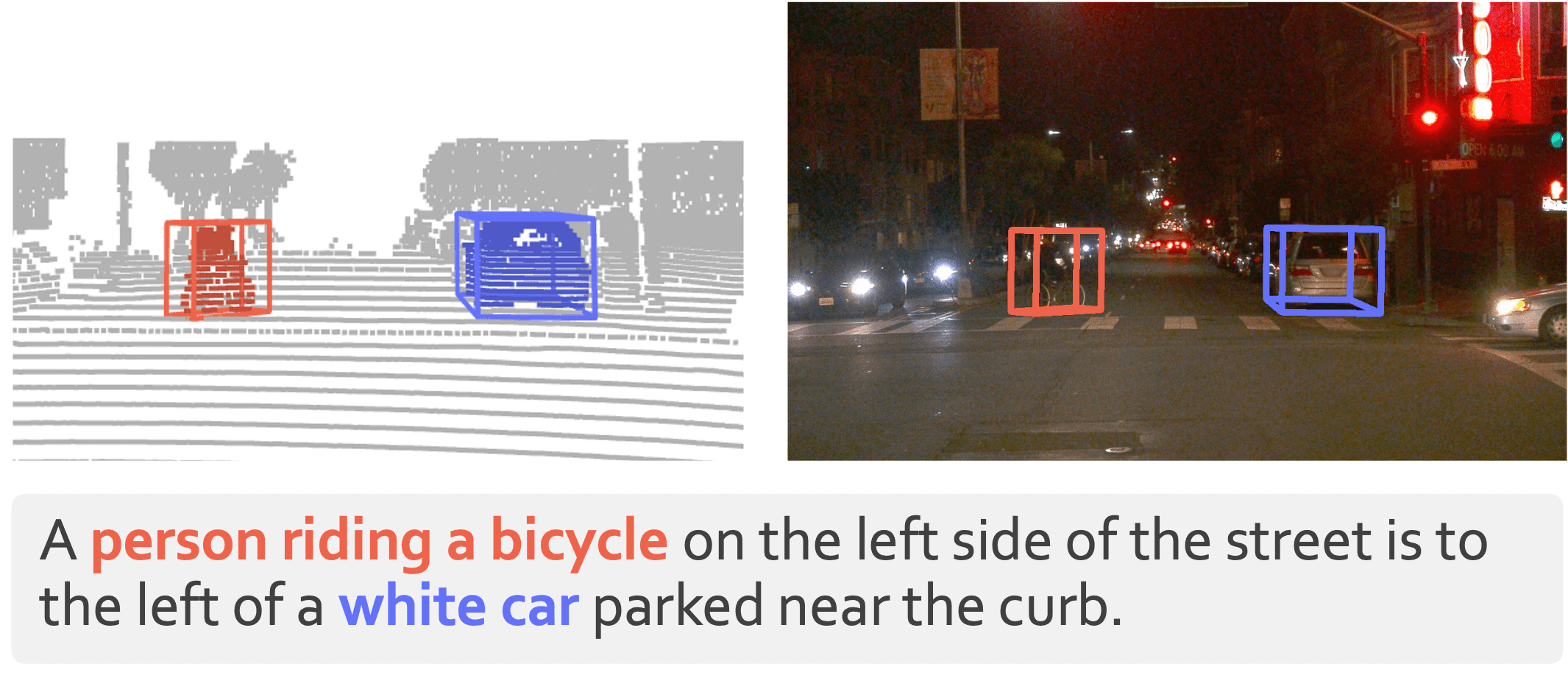

We introduce 3EED, a multi-platform, multi-modal 3D grounding benchmark featuring RGB and LiDAR data from Vehicle, Drone, and Quadruped platforms. We provide over 134,000 objects and 25,000 validated referring expressions across diverse outdoor scenes, 10x larger than existing datasets.

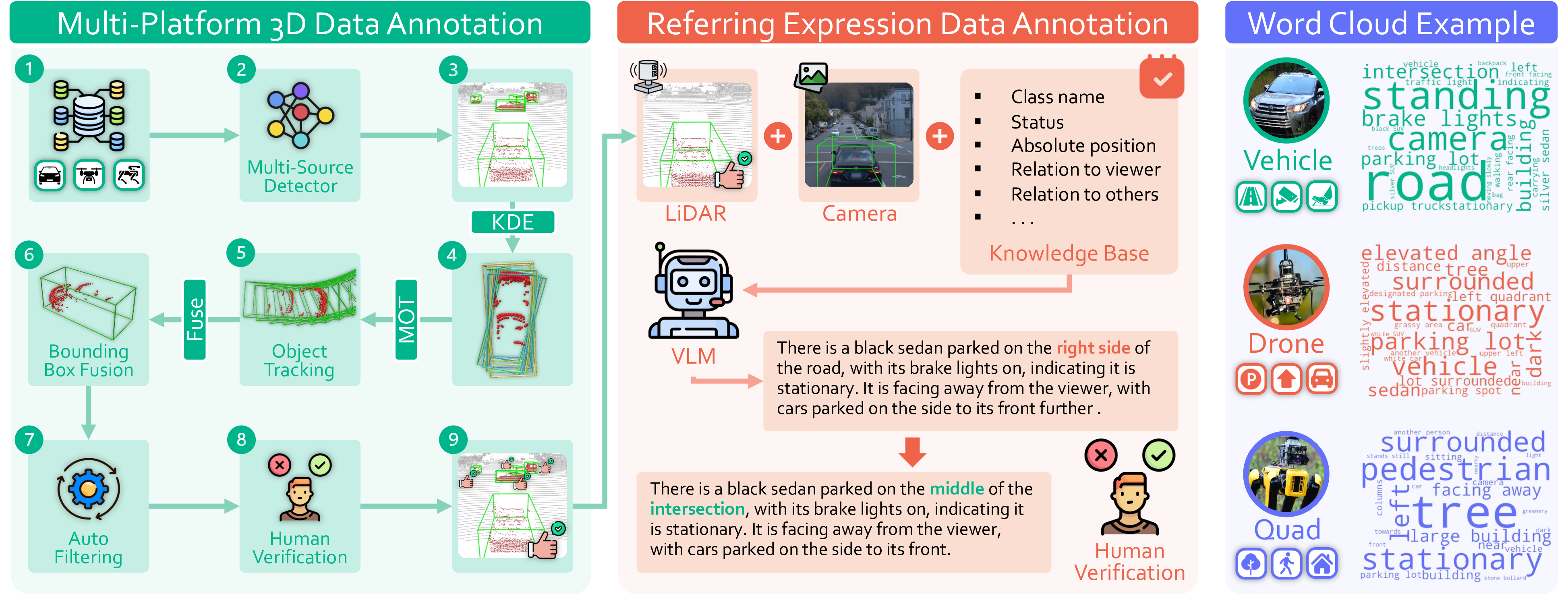

We develop a scalable annotation pipeline combining vision-language model prompting with human verification to ensure high-quality spatial grounding. To support cross-platform learning, we propose platform-aware normalization and cross-modal alignment techniques, and establish benchmark protocols for in-domain and cross-platform evaluations. Our findings reveal significant performance gaps, highlighting the challenges and opportunities of generalizable 3D grounding. The 3EED dataset and benchmark toolkit are released to advance future research in language-driven 3D embodied perception.

The Vehicle, Drone, and Quadruped platforms present unique challenges in spatial reasoning, scene analysis, and cross-platform 3D generalization.